Table of Contents

Web applications pose unique security challenges to businesses and security professionals in that they expose the integrity of their data to the public. A solid 'extrastructure' is not a controllable criterion for any business. Stringent security must be placed around how users are managed (for example, in agreement with an 'appropriate use' policy) and controls must be commensurate with the value of the information protected. Exposure to public networks may require more robust security features than would normally be present in the internal 'corporate' environment that may have additional compensating security.

Several best practices have evolved across the Internet for the governance of public and private data in tiered approaches. In the most stringently secured systems, separate tiers differentiate between content presentation, security and control of the user session, and the downstream data storage services and protection. What is clear is that to secure private or confidential data, a firewall or 'packet filter' is no longer sufficient to provide for data integrity over a public interface.

Where it is possible, sensible, and economic, architectural solutions to security problems should be favored over technical band-aids. While it is possible to put "protections" in place for most critical data, a much better solution than protecting it is to simply keep it off systems connected to public networks. Thinking critically about what data is important and what worst-case scenarios might entail is central to securing web applications. Special attention should be given to the introduction of "choke" points at which data flows can be analyzed and anomalies quickly addressed.

Most firewalls do a decent job of appropriately filtering network packets of a certain construction to predefined data flow paths; however, many of the latest infiltrations of networks occur through the firewall using the ports that the firewall allows through by design or default. It remains critically important that only the content delivery services a firm wishes to provide are allowed to service incoming user requests. Firewalls alone cannot prevent a port-based attack (across an approved port) from succeeding when the targeted application has been poorly written or avoided input filters for the sake of the almighty performance gain. The tiered approach allows the architect the ability to move key pieces of the architecture into different 'compartments' such that the security registry that is not on the same platform as the data store or the content server. Because different services are contained in different 'compartments', a successful exploit of one container does not necessarily mean a total system compromise.

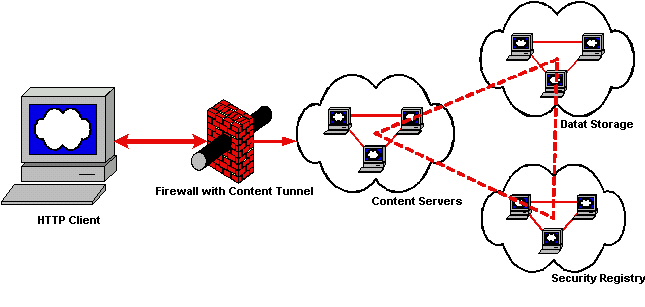

A typical tiered approach to security is presented for the presentation of data to public networks.

A standalone content server provides public access to static repositories. The content server is hosted on a 'hardened' platform in which only the required network listeners and services are running on the platform. Firewalls are optional but a very good idea.

Content services are separated from security repositories and downstream data storage because the use of user credentials is required. The principle at work is to place the controls and content in different compartments and protect the transmission of these confidential tokens using encryption. The user credentials are stored away from the content services and the data repositories such that a compromise of the web tier (content service) doesn't compromise the user registry or the data stores (although the user registry is commonly one of the collections of information in a data store). Segregating the "Security Registry" from the "Content Servers" also allows for more robust controls to be engineered into the functions that validate passwords, record user activity, and define authority roles to data, and additionally provides for some shared resource pooling for common activities such as maintaining a persistent database connection.

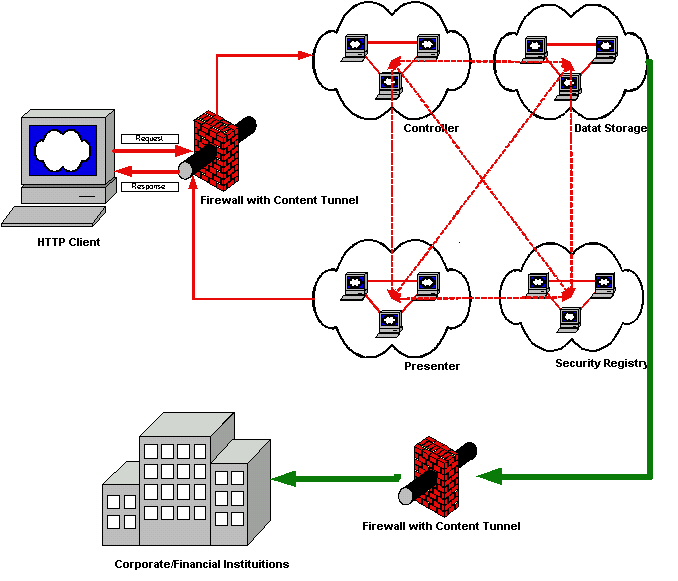

As an example, processing financial transactions typically requires a level of security that is more complex and stringent. Two tiers of firewalls may be needed as a minimal network control, and the content services may be further separated into presentation and control. Auditing of transactions may provide for an 'end-to-end' audit trail in which changes to financial transaction systems are logged with session keys that encapsulate the user identity, originating source network address, time-of-day and other information, and pass this information with the transaction data to the systems that clear the transactions with financial institutions. Encryption may be a requirement for electronic transmissions throughout each of the tiers of this architecture and for the storage of tokens, credentials and other sensitive information.

Digital signing of certain transactions may also be enforced if required by materiality, statutory or legal constraints. Defined conduits are also required between each of the tiers of the services to provide only for those protocols that are required by the architecture. Middleware is a key component; however, middle tier Application Servers can alternatively provide many of the services provided by traditional middleware.

In general, relying on the operating system for security services is not a good strategy. That is not to say the operating system is not expected to provide a secure operating environment. Services like authentication and authorization are generally not appropriately handled for an application by the operating system. Of course this flies in the face of Microsoft's .NET platform strategy and Sun's JAAS. There are times when it is appropriate, but in general you should abstract the security services you need away from the operating system. History shows that too many system compromises have been caused by applications with direct access to parts of the operating system. Kernels generally don't protect themselves. Thus if a bad enough security flaw is found in a part of the operating system, the whole operating system can be compromised and the applications fall victim to the attacker. If the purpose of an operating system is to provide a secure environment for running applications, exposing its security interfaces is not a strategically sound idea.

Web applications run on operating systems that depend on networks to share data with peers and service providers. Each layer of these services should build upon the layers below it. The bottom and fundamental layer of security and control is the network layer. Network controls can range from Access Control Lists at the minimalist approach to clustered stateful firewall solutions at the top end. The primary two types of commercial firewalls are proxy-based and packet inspectors, and the differences seem to be blurring with each new product release. The proxies now have packet inspection and the packet inspectors are supporting HTTP and SOCKS proxies.

Proxy firewalls primarily stop a transaction on one interface, inspect the packet in the application layer and then forward the packets out another interface. Proxy firewalls aren't necessarily dual-homed as they can be implemented solely to stop stateful sessions and provide the forwarding features on the same interface; however, the key feature of a proxy is that it breaks the state into two distinct phases. A key benefit of proxy-based solutions is that users may be forced to authenticate to the proxy before their request is serviced, thereby providing for a level of control that is stronger than that afforded simply by the requestor's TCP/IP address.

Packet inspectors receive incoming requests and attempt to match the header portions of packets (along with other possible feature sets) with known traffic signatures. If the traffic signatures match an 'allowed' rule the packets are allowed to pass through the firewall. If the traffic signatures match 'deny' rules, or they don't match 'allowed' rules, they should be rejected or dropped. Packet inspectors can be further broken into two categories: stateful and non-stateful. A stateful packet inspection firewall learns a session characteristic when the initial session is built after it passes the rulebase, and requires no return rule. The outbound and inbound rules must be programmed into a non-stateful packet inspection firewall.

Regardless of the firewall platform adopted for each specific business need, the general rule is to restrict traffic between web clients and web content servers by allowing only external inbound connections to be formed over ports 80 and 443. Additional firewall rulesets may be required to pass traffic between Application Servers and RDBMS engines such as port 1521. Segmenting the network and providing for routing 'chokes' and 'gateways' is the key to providing for robust security at the network layers.