This text was written by me (Daniel Goscomb) for, and primarily to be published on, www.dcode.net. All content is copyright 2003 dcode Limited. For more information on dcode's products and services, please see the dcode website.

PREVENTION OF THE OWASP TOP 10 IN PERL

Contents

1. Introduction
2. OWASP Background
3. The List
4. The Discussions
5. Conclusion

1. Introduction

This paper aims to take the OWASP (The Open Web Application Security Project) Top 10 and discuss these vulnerabilities/flaws in relation to the Perl programming language.

After reading this paper, people should be more aware of these flaws and have at least a basic understanding of how to keep them out of their Perl applications.

To do this, each item on the OWASP Top 10 list will be taken in turn, the potential problems applicable to Perl will be discussed, and a proposed solution will then be given, using examples where appropriate.

2. OWASP Background

The Open Web Application Security Project (OWASP) is an Open Source community project staffed entirely by volunteers from across the world. The project is developing software tools and knowledge-based documentation that help people secure web applications and web services. Much of the work is driven by discussions on the Web Application Security list at SecurityFocus.com.

For further information please see http://www.owasp.org

3. The List

The following is the list taken from the OWASP website.

A1 - Unvalidated Parameters

Information from web requests is not validated before being used by a web application. Attackers can use these flaws to attack backside components through a web application.

A2 - Broken Access Control

Restrictions on what authenticated users are allowed to do are not properly enforced. Attackers can exploit these flaws to access other users' accounts, view sensitive files, or use unauthorized functions.

A3 - Broken Account and Session Management

Account credentials and session tokens are not properly protected. Attackers that can compromise passwords, keys, session cookies, or other tokens can defeat authentication restrictions and assume other users' identities.

A4 - Cross-Site Scripting (XSS) Flaws

The web application can be used as a mechanism to transport an attack to an end user's browser. A successful attack can disclose the end user's session token, attack the local machine, or spoof content to fool the user.

A5 - Buffer Overflows

Web application components in some languages that do not properly validate input can be crashed and, in some cases, used to take control of a process. These components can include CGI, libraries, drivers, and web application server components.

A6 - Command Injection Flaws

Web applications pass parameters when they access external systems or the local operating system. If an attacker can embed malicious commands in these parameters, the external system may execute those commands on behalf of the web application.

A7 - Error Handling Problems

Error conditions that occur during normal operation are not handled properly. If an attacker can cause errors to occur that the web application does not handle, they can gain detailed system information, deny service, cause security mechanisms to fail, or crash the server.

A8 - Insecure Use of Cryptography

Web applications frequently use cryptographic functions to protect information and credentials. These functions and the code to integrate them have proven difficult to code properly, frequently resulting in weak protection.

A9 - Remote Administration Flaws

Many web applications allow administrators to access the site using a web interface. If these administrative functions are not very carefully protected, an attacker can gain full access to all aspects of a site.

A10 - Web and Application Server Misconfiguration

Having a strong server configuration standard is critical to a secure web application. These servers have many configuration options that affect security and are not secure out of the box.

4. The Discussions

4.1 Unvalidated Parameters

Unvalidated parameters can cause serious problems in the way an application processes data. If a parameter is not checked to see whether it contains the correct amount and format of data, an attacker could potentially inject code into it. If the application later handles that data carelessly, the injected code could then be executed with the system privileges of the user the application runs as.

Parameter validation is, in theory, the primary line of defense within an application: if corrupt data cannot get in, it cannot be used within the program. Careful planning, consideration and testing should therefore go into this part of an application.

A number of developers use JavaScript form validation functions. These are all well and good, as long as they are also backed up by validation within the application. JavaScript is sent in full to the user's browser and can therefore be modified or turned off completely. In other words, it can be bypassed and rendered completely useless.

Perl has a number of ways to collect data sent from HTML forms via GET or POST requests. The two most commonly used are CGI.pm and the generic hand-written routine that turns the raw request buffer into a hash of name/value pairs. Neither of these does any parameter checking: they are written for a wide variety of uses and expect developers to write their own validation routines into their applications. As such, one cannot be content with just using one of these methods; data must be validated using custom-written routines. After all, only the developers know what data a specific application should accept. How would the developers of CGI.pm know?

The best way to validate data in Perl is to use regular expressions. For readers unfamiliar with them, a good book on the subject is O'Reilly's "Mastering Regular Expressions" (ISBN 1-56592-257-3). It is a very good resource for beginners and accomplished coders alike, and covers a number of programming languages as well as Perl.

Below are a number of examples of how data validation with regular expressions will close some holes in applications.

Example 1 - SQL Injection

Imagine an application that provides a log-on form. The form submits its data to the application, which looks up the user's details in an SQL database. The form would most likely have a user name field and a password field.

If there were no validation, the user could enter anything he wished into those fields, and it would be passed straight to the application. Suppose the application used a badly written SQL statement to verify that the user name and password matched, such as the following (where $user and $password are the values entered into the form):
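A minimal sketch of such a statement, assuming a hypothetical users table with username and password columns, simply interpolates the form values into the SQL text:

```perl
use strict;
use warnings;

# DANGEROUS (for illustration only): the form values are pasted directly
# into the SQL text. The table and column names are hypothetical.
sub build_login_query_unsafe {
    my ($user, $password) = @_;
    return "SELECT * FROM users WHERE username = '$user' AND password = '$password'";
}

print build_login_query_unsafe('alice', 'secret'), "\n";
# prints: SELECT * FROM users WHERE username = 'alice' AND password = 'secret'
```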

A user could then enter a deliberately malformed password that makes the database drop a table. Such a form entry would be:
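One hypothetical entry of this kind closes the quoted string, appends a second statement and comments out the remainder. The sketch below builds the resulting query text by naive interpolation, as described above, so the effect is visible without touching a database (the table name is again an assumption):

```perl
use strict;
use warnings;

# Hypothetical malicious password typed into the form. The trailing space
# after -- matters: some databases (MySQL, for example) require whitespace
# after -- for it to start a comment.
my $password = q{x'; DROP TABLE users; -- };

# Naive interpolation turns one statement into two.
my $sql = "SELECT * FROM users WHERE username = 'alice' AND password = '$password'";
print "$sql\n";
# prints: SELECT * FROM users WHERE username = 'alice' AND password = 'x'; DROP TABLE users; -- '
```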

If this were executed (assuming the database interface accepts several statements in one call; some refuse this by default), the entire user table would be lost. Had the input been validated to reject certain dangerous characters (such as ; in the case of SQL), this query would never have been executed.

In this case, the application should have been designed with the rule that the ; character is not allowed in any user-supplied data that will interact with an SQL database (the single quote ' is just as dangerous, since it lets an attacker close a quoted string early). An even better approach is to specify which characters are allowed in each case, for example a-zA-Z0-9 for a password. Had this been done, the attack could easily have been prevented with a snippet such as the following:
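A minimal sketch of such a check follows. The permitted character sets and maximum lengths are assumptions chosen for illustration; \z is used rather than $ because $ also matches just before a trailing newline, which would let a newline slip through.

```perl
use strict;
use warnings;

# Accept only the characters each field is expected to contain.
# The allowed sets and lengths are illustrative assumptions.
sub valid_username {
    my ($v) = @_;
    return defined $v && $v =~ /\A[a-zA-Z0-9_]{1,32}\z/;
}

sub valid_password {
    my ($v) = @_;
    return defined $v && $v =~ /\A[a-zA-Z0-9]{1,64}\z/;
}

my ($user, $password) = ('alice', q{x'; DROP TABLE users; -- });
unless (valid_username($user) && valid_password($password)) {
    print "Invalid user name or password.\n";
    # a real script would stop here, before any SQL is built
}
```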

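Another way of fixing this problem is to write the SQL query properly, using the DBI interface as it is meant to be used: with placeholders, so that user input is passed to the database separately and is never interpreted as part of the SQL statement.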
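A sketch of the placeholder approach, assuming an already connected DBI handle $dbh and the same hypothetical users table. DBI's selectrow_array takes the statement, an attribute hash reference (undef here) and the bind values; each ? in the SQL is filled from the bind values by the driver, so quotes and semicolons in the input are treated purely as data. For brevity the password column is compared directly; in practice it would hold a one-way hash, as discussed in sections 4.3 and 4.8.

```perl
use strict;
use warnings;

# $dbh is a connected DBI handle, e.g. from DBI->connect(...).
# The users table and its columns are hypothetical.
sub check_login {
    my ($dbh, $user, $password) = @_;
    my ($count) = $dbh->selectrow_array(
        'SELECT COUNT(*) FROM users WHERE username = ? AND password = ?',
        undef,               # no extra statement attributes
        $user, $password,    # bind values, matched in order to the ? placeholders
    );
    return defined $count && $count > 0;
}
```

The SQL text never changes, whatever the user types, which is what makes this approach more robust than escaping input by hand.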

Example 2 - File Handles

Another area where input validation helps is when working with files, in particular with the open() function, when the name of the file is taken wholly or partly from user input.

In the same way as before, users could enter information into a form field which would be passed to the application. Say the entire file name was taken from a form field and used in code such as this (where $file comes from user input, and the file should be opened read-only):
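A minimal sketch of such code follows; the subroutine name is hypothetical. Because open() is given only two arguments, Perl takes the open mode from the start of $file itself:

```perl
use strict;
use warnings;

# DANGEROUS (for illustration only): with two-argument open(), mode
# characters at the start of $file (<, >, >>, |) are obeyed, so the user,
# not the programmer, decides how the file is opened.
sub read_file_unsafe {
    my ($file) = @_;                  # taken directly from user input
    open(my $fh, $file) or die "Cannot open $file: $!";
    local $/;                         # slurp mode: read the whole file
    my $contents = <$fh>;
    close($fh);
    return $contents;
}
```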

Now, if the user were to enter something such as:
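For instance, a file name preceded by >, such as >/path/to/important/file. With a two-argument open(), the leading > is treated as a request to open the file for writing. The self-contained sketch below demonstrates the effect on a temporary file standing in for the real target:

```perl
use strict;
use warnings;
use File::Temp qw(tempfile);

# Stand-in for an important file (a temporary file, for safety).
my ($tmp_fh, $target) = tempfile(UNLINK => 1);
print {$tmp_fh} "important data\n";
close($tmp_fh);

# What the attacker types: a leading '>' turns the "read" into a write.
my $file = ">$target";

# Two-argument open() obeys the '>' and truncates the file.
open(my $fh, $file) or die "Cannot open: $!";
close($fh);

print -s $target ? "file intact\n" : "file truncated\n";   # prints "file truncated"
```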

That file would be opened for writing and truncated, erasing all of its data (permissions permitting, of course).

As well as validating the form input, this can be avoided by ALWAYS specifying the mode in which a file should be opened. So the above code should be rewritten as:
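A minimal sketch using the three-argument form of open() (available since Perl 5.6), with a hypothetical subroutine name. The mode is passed as a separate argument, so characters such as > or | in $file are treated as part of the file name rather than as instructions:

```perl
use strict;
use warnings;

# Safe version: '<' (read-only) is always the mode, whatever $file contains.
sub read_file_safe {
    my ($file) = @_;
    open(my $fh, '<', $file) or return;   # hypothetical policy: undef on failure
    local $/;                             # slurp mode
    my $contents = <$fh>;
    close($fh);
    return $contents;
}
```

Given >/path/to/important/file, this simply fails to find a file with that literal name, and the real file is left untouched.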

This will make sure that no matter what is in the $file variable, it will always be opened in read-only mode.

Summary

As can be seen above, input validation is an easy, powerful and useful technique. It should be written into all applications, however simple or complicated they are, and will help prevent someone exploiting an application. Beware, however, that it is not the be-all and end-all of application security!

4.2 Broken Access Control

Broken access control occurs when a user is authenticated but can then access parts of an application for which they do not have privileges. The common fault here is restricting access to certain areas through obscurity, e.g. only printing links to certain areas when a particular user is logged on and hiding them otherwise. Unless the areas missing from these links have their own access controls, a user of the application could easily reach them by typing the URL into their browser.

The best way to prevent this is by using sessions. Each time a page is loaded from the application the user's session should be checked and also their access rights to that particular area should be verified. If they are not authorised to access that area then an error message should be given and the application should terminate. This effectively means a user is authorised every time they click or load a page.
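A sketch of such a per-page check follows. The permission table, role names and session structure are all hypothetical; the point is that every request is checked against the server's record of what the user may do, not against which links were displayed.

```perl
use strict;
use warnings;

# Hypothetical permission table: which areas each role may use.
my %ALLOWED = (
    admin    => { orders => 1, reports => 1, users => 1 },
    operator => { orders => 1 },
);

# Call at the top of EVERY page, before any output. $session is the
# server-side session record, or undef if no valid session was found.
sub authorise {
    my ($session, $area) = @_;
    return 0 unless $session && defined $session->{role};
    my $perms = $ALLOWED{ $session->{role} } or return 0;   # unknown role: deny
    return $perms->{$area} ? 1 : 0;
}

# Example: an operator typing the URL of the users page directly.
my $session = { user => 'bob', role => 'operator' };
unless (authorise($session, 'users')) {
    print "Status: 403 Forbidden\r\nContent-Type: text/plain\r\n\r\n";
    print "You are not authorised to access this area.\n";
    # a real script would exit here
}
```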

A number of considerations should be made when coding such a system, the main one being data security. Session identifiers should not be easily predictable, and they should be tied to the client's IP address to reduce the possibility of session hijacking (bearing in mind that users behind some proxies may change address between requests). The user should never be authenticated by a single piece of information: passing in just the user name, for example, would make it easy for someone to masquerade as another user, since that one piece of information is readily available on some systems (such as forums).

To enable proper access control, a method of tracking sessions will be needed. The most secure way of doing this is to hold session information on the server (in an SQL table, for example) and to set a session cookie containing only a limited amount of information, which is matched against the information stored on the server each time it is passed back. Relying on the client to hold all session information is obviously risky, because the data can be tampered with. For added security, cookies should be encrypted with a module such as Crypt::Blowfish.
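The sketch below shows one way to generate unpredictable session identifiers and keep the session data on the server. It assumes a Unix-like system providing /dev/urandom, uses an in-memory hash in place of the SQL table, and ties each session to the client's IP address as suggested above; the field names are hypothetical.

```perl
use strict;
use warnings;

# Server-side session store; a hash stands in for the SQL table.
my %SESSIONS;

# Unpredictable session ID: 16 bytes from the OS random source, hex-encoded.
sub new_session_id {
    open(my $fh, '<:raw', '/dev/urandom') or die "Cannot read /dev/urandom: $!";
    read($fh, my $bytes, 16) == 16 or die "Short read from /dev/urandom";
    close($fh);
    return unpack('H*', $bytes);
}

# Create a session; only the returned ID is sent to the browser.
sub create_session {
    my ($user, $ip) = @_;
    my $id = new_session_id();
    $SESSIONS{$id} = { user => $user, ip => $ip, created => time };
    return $id;
}

# Look up the session for a cookie value, rejecting malformed or unknown
# IDs and requests arriving from a different IP address.
sub find_session {
    my ($id, $ip) = @_;
    return unless defined $id && $id =~ /\A[0-9a-f]{32}\z/;
    my $s = $SESSIONS{$id} or return;
    return unless $s->{ip} eq $ip;
    return $s;
}
```

Only the ID would travel in the cookie, so tampering with it gains an attacker nothing unless he can guess another live ID. A real application would also expire old sessions using the created time.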

4.3 Broken Account and Session Management

This ties in with the section above. Broken account management could occur, for example, when information about users stored in a database is not properly protected, such as through a weak password lookup function that requires little or no information to use. In this case an attacker could easily find the information for another user and assume his identity.

There are numerous methods to protect against this. One of the obvious ones is cryptography. If passwords are stored only as one-way hashes, it is hard to turn them back into their plain-text equivalents. Authentication is then performed by passing the entered details through the same function and matching the result against the value held.
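As a hedged sketch using only Digest::SHA (a core module since Perl 5.10), the following salts each password with random bytes and derives a deliberately slow hash with PBKDF2-HMAC-SHA256 (RFC 2898). The iteration count, the storage format and /dev/urandom as the random source are assumptions; CPAN also offers ready-made modules for password hashing.

```perl
use strict;
use warnings;
use Digest::SHA qw(hmac_sha256);

# PBKDF2 with HMAC-SHA256. Expects byte strings (encode Unicode first).
sub pbkdf2_sha256 {
    my ($password, $salt, $iterations, $length) = @_;
    my $derived = '';
    for (my $block = 1; length($derived) < $length; $block++) {
        # U1 = HMAC(key = password, data = salt . INT32_BE(block));
        # note Digest::SHA's argument order is ($data, $key).
        my $u = hmac_sha256($salt . pack('N', $block), $password);
        my $t = $u;
        for (2 .. $iterations) {
            $u = hmac_sha256($u, $password);
            $t ^= $u;                     # XOR the byte strings together
        }
        $derived .= $t;
    }
    return substr($derived, 0, $length);
}

sub random_bytes {
    my ($n) = @_;
    open(my $fh, '<:raw', '/dev/urandom') or die "Cannot read /dev/urandom: $!";
    read($fh, my $bytes, $n) == $n or die "Short read from /dev/urandom";
    close($fh);
    return $bytes;
}

# Stored form (hypothetical): "iterations$salt_hex$hash_hex".
sub hash_password {
    my ($password) = @_;
    my $iterations = 50_000;              # assumption: tune to your hardware
    my $salt = random_bytes(16);
    my $hash = pbkdf2_sha256($password, $salt, $iterations, 32);
    return join '$', $iterations, unpack('H*', $salt), unpack('H*', $hash);
}

sub check_password {
    my ($password, $stored) = @_;
    my ($iterations, $salt_hex, $hash_hex) = split /\$/, $stored;
    return 0 unless defined $hash_hex;
    my $candidate = unpack('H*',
        pbkdf2_sha256($password, pack('H*', $salt_hex), $iterations, 32));
    return 0 unless length($candidate) == length($hash_hex);
    # Compare every character so timing does not reveal where a mismatch is.
    my $diff = 0;
    $diff |= ord(substr($candidate, $_, 1)) ^ ord(substr($hash_hex, $_, 1))
        for 0 .. length($candidate) - 1;
    return $diff == 0;
}
```

Because each password has its own salt, two users with the same password get different stored values, and an attacker must attack each hash separately.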

Broken session management can also be caused by badly protected data. For example, if pages containing user information are cached, another user of the same machine or proxy server could easily read them and find out details about users. For this reason session management tokens should never hold any account-specific information and should ideally be encrypted.

Another type of attack that could be used here is a brute-force attack on the login fields. This can be prevented in a number of ways. One is an automatic lockout after a preset number of failed login attempts. Another is to ask for a number of letters from the password, chosen at random (i.e. a different set of letter positions each time).
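A sketch of the lockout idea follows, with a hypothetical threshold of five consecutive failures and an in-memory counter standing in for a database table (under CGI, each request is a new process, so the count would really have to be stored). The password check is passed in as a code reference, since how passwords are verified is up to the application. Note that a lockout can itself be abused to lock out a known user deliberately, so some systems release the lock after a period of time.

```perl
use strict;
use warnings;

my %FAILED;               # user name => consecutive failed attempts
my $MAX_FAILURES = 5;     # assumption: lock after 5 consecutive failures

sub is_locked {
    my ($user) = @_;
    return ($FAILED{$user} || 0) >= $MAX_FAILURES;
}

# $verify is a code ref returning true when the password is correct.
sub attempt_login {
    my ($user, $password, $verify) = @_;
    return 'locked' if is_locked($user);      # refuse even a correct password
    if ($verify->($user, $password)) {
        delete $FAILED{$user};                # reset the counter on success
        return 'ok';
    }
    $FAILED{$user}++;
    return is_locked($user) ? 'locked' : 'failed';
}
```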

The main point here is data security. There should be no easy way to get to the data used to authenticate users (login or session) within an application.

4.4 Cross Site Scripting (XSS) Flaws

Protection against cross-site scripting flaws comes mainly from input validation, as discussed in section 4.1. The major problem with cross-site scripting is that an attacker can trick a user, or a user's browser, into sending confidential information to them, or get the user's browser to display information that may lead the user to believe something untrue.

Imagine the following scenario:

A store of some description is run online. When a user places an order they enter their address details, etc. into a form, so the store operators know where to send the order. When an order is complete the operator looks at all the information on a page in their own browser, which displays the address, etc. Now imagine that customer services also use this page to track customers' orders and complaints.

The attacker could place an order and in one of the fields enter the following:

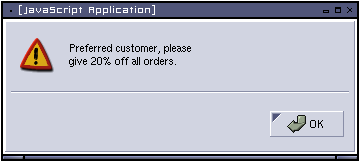

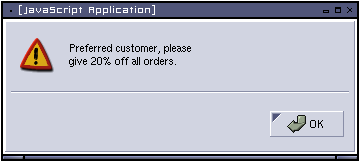

Now when the operator comes to process the attacker's order they will see the following:

If the operator believes this is a genuine part of the software, the attacker may well get a 20% discount that they are not supposed to receive.

There are two ways to deal with these attacks. One is to remove all potential code with your input validation. However, this may be inappropriate, as characters such as < and > may be valid input. In such cases these characters should be converted to their HTML-safe equivalents: for example, < should be changed to &lt; and > should be changed to &gt;.

This is easily done with, again, regular expressions. The simplest of these are as follows:
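A minimal sketch follows. The ampersand is replaced first, otherwise the & in the entities produced later would be escaped a second time; quotes are also escaped so the result is safe inside attribute values. The sample text is hypothetical. CGI.pm's escapeHTML() and the encode_entities() function of HTML::Entities do the same job.

```perl
use strict;
use warnings;

# Convert HTML metacharacters to entities before echoing user data into a page.
sub escape_html {
    my ($text) = @_;
    $text =~ s/&/&amp;/g;      # must come first
    $text =~ s/</&lt;/g;
    $text =~ s/>/&gt;/g;
    $text =~ s/"/&quot;/g;
    $text =~ s/'/&#39;/g;
    return $text;
}

print escape_html(q{<b>20% discount</b> & "free" delivery}), "\n";
# prints: &lt;b&gt;20% discount&lt;/b&gt; &amp; &quot;free&quot; delivery
```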

Other characters can be made safe in the same way. Another way is to remove blocks of <script></script> from input completely. This can be done simply like so:
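A sketch of such a substitution follows: /i makes it case-insensitive, /s lets . match newlines so multi-line blocks are removed, and .*? stops at the first closing tag. It does nothing about event-handler attributes such as onmouseover or onerror on other tags, so converting characters to entities, as described earlier in this section, is the safer default.

```perl
use strict;
use warnings;

# Remove <script ...> ... </script> blocks, including their contents.
sub strip_script_blocks {
    my ($text) = @_;
    $text =~ s{<script\b[^>]*>.*?</script\s*>}{}gis;
    return $text;
}

print strip_script_blocks('Hello<script>alert(1)</script> world'), "\n";
# prints: Hello world
```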

This will remove anything within a script block completely.

4.5 Buffer Overflows

Due to the nature of Perl's data structures, Perl scripts are not generally susceptible to buffer overflow attacks, because data structures are dynamically extended when needed. Perl keeps track of the allocated size and length of every string. Before a string is written to, Perl ensures that enough space is available within it, and extends the string if needed.

That said, there are some versions of Perl which are vulnerable to buffer overflow attacks, most notably version 5.003. All versions of suidperl (now discontinued) built from versions earlier than 5.004 are also vulnerable to buffer overflows. Please see CERT Advisory CA-1997-17, available from http://www.cert.org

4.6 Command Injection Flaws

This, again, relates to section 4.1 (Unvalidated Parameters): user-supplied data is passed directly to system calls, where it could invoke other commands.

As well as parameter validation there are a number of other techniques that can be used in Perl to prevent this outcome including proper use of the system calls.

Typically, when calling the system() function, the full path to the program to be executed is specified and user input is passed as a parameter to that call. For example (where $file is from user input):
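A minimal sketch, assuming /bin/cat as a hypothetical viewer program. When system() is given a single string containing shell metacharacters, Perl passes the whole string to /bin/sh -c, so the shell interprets anything in $file:

```perl
use strict;
use warnings;

# DANGEROUS (for illustration only): one string is handed to the shell,
# so characters such as ; | & ` in $file are interpreted by it.
sub show_file_unsafe {
    my ($file) = @_;                    # taken from user input
    return system("/bin/cat $file");    # returns the exit status; 0 = success
}
```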

As discussed in section 4.1, this could be dangerous if a user entered, for example, the following:
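For instance, a name followed by a semicolon and a second command, such as nosuchfile; rm -f /path/to/important/file. The self-contained sketch below runs this against a temporary file standing in for the real target:

```perl
use strict;
use warnings;
use File::Temp qw(tempdir);

# Stand-in for a file the attacker wants to delete (temporary, for safety).
my $dir    = tempdir(CLEANUP => 1);
my $victim = "$dir/important.txt";
open(my $fh, '>', $victim) or die "Cannot create $victim: $!";
print {$fh} "data\n";
close($fh);

# What the attacker types into the form field:
my $file = "nosuchfile; rm -f $victim";

# The shell runs cat (which fails), then rm, because ';' separates commands.
system("/bin/cat $file");
print -e $victim ? "file survived\n" : "file deleted\n";   # prints "file deleted"
```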

Obviously this would allow them to delete any file they wanted (permissions permitting). If system() were instead invoked with a list of parameters, the first being the command name, this would not be possible: with more than one argument, system() does not go through the shell, but executes the program named in the first parameter directly and passes all the others to it as arguments. This could be done as follows:
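A minimal sketch of the list form, with the same hypothetical /bin/cat viewer. The -- argument is an extra precaution (not part of the discussion above) that stops cat treating a name beginning with - as an option:

```perl
use strict;
use warnings;

# Safe version: no shell is involved, and $file reaches cat as ONE
# argument, however many ';' or spaces it contains.
sub show_file_safe {
    my ($file) = @_;
    return system('/bin/cat', '--', $file);
}
```

Given nosuchfile; rm -f /path/to/important/file, cat simply reports that no file of that exact name exists. As before, system() returns the exit status (0 on success), with full details in $?.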

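Another way to tackle this problem is to put only the commands the application needs in a specific directory, and to execute the Perl scripts through Apache's suexec wrapper with its safe path setting (SAFEPATH) pointing at this directory. Commands invoked without a full path can then only be found in that directory. Note, however, that this setting only controls the PATH environment variable passed to scripts, so it does not stop a program elsewhere on the system from being run by its full path.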

4.7 Error Handling Problems

It is imperative that errors are handled correctly and do not give out any unnecessary information to users of the application. A prime example of this is SQL errors. Imagine the following error message is generated at some point in the application:

This would give the user a lot of information about the way the system works, especially if he could cause this error at will by entering invalid data. He would see the text of the query, and therefore every table involved in it and some or all of the column names. By collating the details from each error he sees, he could build up a general picture of the database structure, and with that information he could possibly use SQL injection techniques to modify it, potentially raising his access rights on the system.

It is common practice to use error numbers, documented elsewhere, while also writing a full error message to a system log file for the administrator's use. This way the user gets an error message that reveals nothing about the internals of the application, and if he feels inclined to report the problem to the administrator he can still do so with a meaningful message.
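A sketch of this approach follows. The error numbers and messages are hypothetical; under CGI, anything printed to STDERR normally ends up in the web server's error log, while only the generic message with its number is returned for display.

```perl
use strict;
use warnings;

# Hypothetical error catalogue, documented for users and support staff.
my %ERRORS = (
    1001 => 'The requested record could not be retrieved.',
    1002 => 'Your request could not be processed.',
);

# Log full technical detail for the administrator; return only a generic,
# numbered message for the user.
sub user_error {
    my ($code, $detail) = @_;
    print STDERR "[error $code] $detail\n";
    my $text = $ERRORS{$code} || 'An internal error occurred.';
    return "Error $code: $text Please quote this number if you contact the administrator.\n";
}

print user_error(1001, 'hypothetical detail: query text and database message');
```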

Some applications run all their code through the eval() function to trap any errors and send them to STDOUT (i.e. the user's browser). This can be dangerous, because fragments of code, however small, may be shown to the user of the application. Some of this code may, for example, contain information such as database connection strings, which means the attacker could then potentially log on to the database with full permissions and modify it as they please.

There is no real need to do this. It is far safer to create an error 500 page on the web server, using the "ErrorDocument 500 /path/to/file.html" directive, to give a general script error. All information needed about the errors should be contained in the server's error logs. If it is desirable to add extra information to these logs, simply print to STDERR in the error handling routines.

4.8 Insecure Use of Cryptography

Most applications will use cryptography at one point or another to store confidential information such as passwords. There are a number of points to consider when choosing the algorithm to be used. The main one is: will the data ever need to be read in its decrypted form? If the answer is no, one-way encryption (hashing) should be used. This means that, to authenticate, the input must be processed in the same way and matched against the information held. If an attacker gets hold of the stored information, they will not be able to decipher it, and the only way to crack it is the time-consuming brute-force method.

If the encrypted data does need to be read in its decrypted form, then asymmetric cryptography should be used. In this method different "keys" are used to encrypt and decrypt data. By keeping the keys completely separate, data can be encrypted within the application and only deciphered with the private key held by the administrator. The private key itself can also be protected, for example with a passphrase.

Many people use weak cryptography which can be easily broken. Schemes whose patterns are easy to spot, or that can be worked out mathematically from little information, are not good enough: once an attacker has a number of samples, it may be easy to work out how the calculations are done and reverse them.

Another thing overlooked is the length and randomness of encryption keys. Many people will use short, easy to remember keys which are by no means random and may even be based on dictionary words, making them easy to crack using a brute-force cracker.

Perl has a number of Cryptography libraries available for use which can be downloaded from CPAN (http://www.cpan.org).

4.9 Remote Administration Flaws

Most web applications contain certain functions to administer some aspects of the application. The discussions in sections 4.2 and 4.3 are also relevant here, particularly the parts on session management and authenticating on every click.

Ideally the administration portion of an application should be completely segregated from all other aspects of the system. It should be run from another area, and access should preferably be limited to certain IP addresses or, even better, to local networks only (i.e. use a VPN to tunnel to the machine). Login information should also be held in tables separate from all other user information.
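Where the restriction cannot be made in the web server's own configuration, a script can check the client address itself. The sketch below is IPv4-only and assumes the allowed networks are listed in CIDR form (the ranges shown are hypothetical). REMOTE_ADDR is the address the web server sees, which may belong to a proxy rather than the real client.

```perl
use strict;
use warnings;
use Socket qw(inet_aton);

# Hypothetical networks allowed to reach the administration pages.
my @ADMIN_NETS = ('10.0.0.0/8', '192.168.1.0/24');

# True if a dotted-quad IPv4 address (e.g. $ENV{REMOTE_ADDR}) lies in one
# of the allowed networks.
sub ip_allowed {
    my ($ip) = @_;
    return 0 unless defined $ip && $ip =~ /\A\d{1,3}(?:\.\d{1,3}){3}\z/;
    my $packed = inet_aton($ip) or return 0;
    my $addr = unpack('N', $packed);
    for my $net (@ADMIN_NETS) {
        my ($base, $bits) = split m{/}, $net;
        my $mask = $bits == 0 ? 0 : (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF;
        return 1 if ($addr & $mask) == (unpack('N', inet_aton($base)) & $mask);
    }
    return 0;
}

print ip_allowed($ENV{REMOTE_ADDR}) ? "allowed\n" : "denied\n";
```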

If this is not possible, then strong authentication must be used on all administrative pages. As discussed in section 4.2, every click a user makes should be authenticated against a user table.

As a further note, it is preferable to run all administration over an encrypted connection, for example HTTPS or a VPN with no public access.

4.10 Web and Application Server Misconfiguration

This section is only really applicable to administrators of application servers. The information in this section is specific to the Apache web server, although it may be relevant to other server platforms.

The default configuration of most web servers is not secure, and should never be thought of as such. If the server is compiled with the default options, the finished binary contains a lot of modules that will probably never be used and are therefore only a hindrance in terms of security. For this reason any unused modules should be removed from the configuration.

There are also modules that can be added to Apache which are either not in the default configuration (such as suexec) or are not part of the Apache distribution at all. There are many useful third-party Apache modules, such as mod_security from http://www.webkreator.com/mod_security/, which adds an extra layer of security to web applications.

As Perl scripts are run as CGI programs by the web server, they should always run with the minimum permissions needed to perform their task. Suexec can help here by allowing them to run as a user other than the web server's default user. Suexec also checks file and directory permissions, and refuses to run a script (logging the reason) if, for example, it is writable by other users.

Apache can be configured (with the AddHandler directive and Options ExecCGI) to run CGI scripts with certain extensions from anywhere in the DocumentRoot. This can be dangerous, especially if directory browsing is enabled on the server: people could find libraries for scripts in the same directory and read an application's configuration directives. This also raises the point that application configuration files, and any other files that need not be accessed directly by the user, should NEVER be stored in the DocumentRoot but always outside it, preferably in directories not readable by other users on the system (this is especially important in a shared hosting environment).

5. Conclusion

As can be seen here, most of the OWASP Top 10 list applies to Perl in one way or another. Although most of these problems can be prevented by proper input validation, this should never be the only thing relied on to secure applications. All aspects of security that have been identified should be addressed, no matter how trivial they seem at the time.

Developers may not have the permissions on servers to secure applications in the way they see fit. The only things that can be done in this situation are to talk to the hosting provider and suggest that they secure their systems, or to move to a dedicated hosting solution where one can carry out all of one's own administration.