The Open Web Application Security Project

Version 1.1 Final

Copyright © 2002 The Open Web Application Security Project (OWASP). All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation.

Sun Sep 22 2002

Table of Contents

- I. A Guide to Building Secure Web Applications

- 1. Introduction

- 2. Overview

- 3. How Much Security Do You Really Need?

- 4. Security Guidelines

- 5. Architecture

- 6. Authentication

- 7. Managing User Sessions

- 8. Access Control and Authorization

- 9. Event Logging

- 10. Data Validation

- 11. Preventing Common Problems

- 12. Privacy Considerations

- 13. Cryptography

- II. Appendixes

List of Tables

- 7.1. Structure Of A Cookie

- 11.1.


We all use web applications every day, whether we consciously know it or not. That is, all of us who browse the web. That is all of us, right? The ubiquity of web applications is not always apparent to the everyday web user. When you go to cnn.com and the site automagically knows you are a US resident and serves you US news and local weather, it's all because of a web application. When you need to transfer money, search for a flight, check out arrival times or even the latest sports scores during work, you probably do it using a web application. Web Applications and Web Services (web applications that describe what they do to other web applications) are the major force behind the next generation Internet. Sun and Microsoft, with their Sun One and .NET strategies respectively, are gambling their entire business on them being a key infrastructure component of the Internet.

The last two years have seen a significant surge in the number of web-application-specific vulnerabilities disclosed to the public. With the increasing concern around security in the wake of Sept 11th, 2001, questions continue to be raised about whether there is adequate protection for the ever-increasing array of sensitive data migrating its way to the web. To this day, not one web application technology has shown itself invulnerable to the inevitable discovery of vulnerabilities that affect its owners' and users' security and privacy.

Most security professionals have traditionally focused on network and operating system security. Assessment services have typically relied heavily on automated tools to help find holes in those layers. Those tools were developed by a few skilled technical people who only needed to have detailed knowledge of, and do research on, a few operating systems. They often grew up with a copy of Windows NT at home or a Unix variant as a hobbyist and knew its workings inside and out. But today's needs are different. While the curious hobbyist turned security software developer can have a copy of Windows NT server and Microsoft's Internet Information Server running in his bedroom on his home PC, he can't have an online bookstore to play with and figure out what works and what doesn't.

While this document doesn't provide a silver bullet to cure all the ills, we hope it goes a long way in taking the first step towards helping people understand the inherent problems in web applications and build more secure web applications and Web Services in the future.

Kind Regards,

The OWASP Team

The Open Web Application Security Project (or OWASP--pronounced OH' WASP) was started in September of 2001. At the time there was no central place where developers and security professionals could learn how to build secure web applications or test the security of their products. At the same time the commercial marketplace for web applications started to evolve. Certain vendors were peddling some significant marketing claims around products that really only tested a small portion of the problems web applications were facing; and service companies were marketing application security testing that really left companies with a false sense of security.

OWASP is an open source reference point for system architects, developers, vendors, consumers and security professionals involved in Designing, Developing, Deploying and Testing the security of web applications and Web Services. In short, the Open Web Application Security Project aims to help everyone and anyone build more secure web applications and Web Services.

While several good documents are available to help developers write secure code, at the time of this project's conception there were no open source documents that described the wider technical picture of building appropriate security into web applications. This document sets out to describe technical components, and certain people, process, and management issues that are needed to design, build and maintain a secure web application. This document will be maintained as an ongoing exercise and expanded as time permits and the need arises.

Any document about building secure web applications clearly will have a large degree of technical content and address a technically oriented audience. We have deliberately not omitted technical detail that may scare some readers. However, throughout this document we have sought to refrain from "technical speak for the sake of technical speak" wherever possible.

This document is designed to be used by as many people and in as many inventive ways as possible. While sections are logically arranged in a specific order, they can also be used alone or in conjunction with other discrete sections.

Here are just a few of the ways we envisage it being used:

When designing a system the system architect can use the document as a template to ensure he or she has thought about the implications that each of the sections described could have on his or her system.

When engaging professional services companies for web application security design or testing, it is extremely difficult to accurately gauge whether the company or its staff are qualified and if they intend to cover all of the items necessary to ensure an application (a) meets the security requirements specified or (b) will be tested adequately. We envisage companies being able to use this document to evaluate proposals from security consulting companies to determine whether they will provide adequate coverage in their work. Companies may also request services based on the sections specified in this document.

We anticipate security professionals and systems owners using this document as a template for testing. By a template we refer to using the sections outlined as a checklist or as the basis of a testing plan. Sections are split into a logical order for this purpose. Testing without requirements is of course an oxymoron. What do you test against? What are you testing for? If this document is used in this way, we anticipate a functional questionnaire of system requirements to drive the process. As a complement to this document, the OWASP Testing Framework group is working on a comprehensive web application methodology that covers both "white box" (source code analysis) and "black box" (penetration test) analysis.

This document is most definitely not a silver bullet! Web applications are almost all unique in their design and in their implementation. Even if you cover all items in this document, you may still have significant security vulnerabilities that have not been addressed. In short, this document is no guarantee of security. In its early iterations it may also not cover items that are important to you and your application environment. However, we do think it will go a long way toward helping the audience achieve their desired state.

If you are a subject matter expert, feel there is a section you would like included and are volunteering to author or are able to edit this document in any way, we want to hear from you. Please email [email protected] .

This document will be organic. As well as expanding the initial content, we hope to include other types of content in future releases. Currently the following topics are being considered:

Language Security

Java

C CGI

C#

PHP

Choosing Platforms

.NET

J2EE

Federated Authentication

MS Passport

Project Liberty

SAML

Error Handling

If you would like to see specific content or indeed would like to volunteer to write specific content we would love to hear from you. Please email <[email protected]>.


In essence a Web Application is a client/server software application that interacts with users or other systems using HTTP. For a user the client would most likely be a web browser like Internet Explorer or Netscape Navigator; for another software application this would be an HTTP user agent that acts as an automated browser. The end user views web pages and is able to interact by sending choices to and from the system. The functions performed can range from relatively simple tasks like searching a local directory for a file or reference, to highly sophisticated applications that perform real-time sales and inventory management across multiple vendors, including both Business to Business and Business to Consumer e-commerce, workflow and supply chain management, and legacy applications. The technology behind web applications has developed at the speed of light. Traditionally simple applications were built with a common gateway interface application (CGI) typically running on the web server itself and often connecting to a simple database (again often on the same host). Modern applications typically are written in Java (or similar languages) and run on distributed application servers, connecting to multiple data sources through complex business logic tiers.

There is a lot of confusion about what a web application actually consists of. While it is true that the problems so often discovered and reported are product specific, they are really logic and design flaws in the application logic, and not necessarily flaws in the underlying web products themselves.

It can help to think of a web application as being made up of three logical tiers or functions.

Presentation Tiers are responsible for presenting the data to the end user or system. The web server serves up data and the web browser renders it into a readable form, which the user can then interpret. It also allows the user to interact by sending back parameters, which the web server can pass along to the application. This "Presentation Tier" includes web servers like Apache and Internet Information Server and web browsers like Internet Explorer and Netscape Navigator. It may also include application components that create the page layout.

The Application Tier is the "engine" of a web application. It performs the business logic: processing user input, making decisions, obtaining more data and presenting data to the Presentation Tier to send back to the user. The Application Tier may include technology like CGIs, Java, .NET services, PHP or ColdFusion, deployed in products like IBM WebSphere, WebLogic, JBOSS or ZEND.

A Data Tier is used to store things needed by the application and acts as a repository for both temporary and permanent data. It is the bank vault of a web application. Modern systems now typically store data in XML format for interoperability with other systems and sources.

Of course, small applications may consist of a simple C CGI program running on a local host, reading or writing files to disk.

Web Services are receiving a lot of press attention. Some are heralding Web Services as the biggest technology breakthrough since the web itself; others are more skeptical that they are nothing more than evolved web applications.

A Web Service is a collection of functions that are packaged as a single entity and published to the network for use by other programs. Web services are building blocks for creating open distributed systems, and allow companies and individuals to quickly and cheaply make their digital assets available worldwide. One early example is Microsoft Passport, but many others such as Project Liberty are emerging. One Web Service may use another Web Service to build a richer set of features to the end user. Web services for car rental or air travel are examples. In the future applications may be built from Web services that are dynamically selected at runtime based on their cost, quality, and availability.

The power of Web Services comes from their ability to register themselves as being available for use using WSDL (Web Services Description Language) and UDDI (Universal Description, Discovery and Integration). Web services are based on XML (Extensible Markup Language) and SOAP (Simple Object Access Protocol).

Regardless of whether you see a difference between sophisticated web applications and Web Services, it is clear that these emerging systems will face the same security issues as traditional web applications.


When one talks about security of web applications, a prudent question to pose is "how much security does this project require?" Software is generally created with functionality first in mind and with security as a distant second or third. This is an unfortunate reality in many development shops. Designing a web application is an exercise in designing a system that meets a business need and not an exercise in building a system that is just secure for the sake of it. However, the application design and development stage is the ideal time to determine security needs and build assurance into the application. Prevention is better than cure, after all!

It is interesting to observe that most security products available today are mainly technical solutions that target specific types of issues, problems or protocol weaknesses. They are products retrofitting security onto existing infrastructure, including tools like application layer firewalls and host/network based Intrusion Detection Systems (IDSs). Imagine a world without firewalls (nearly drifted into a John Lennon song there); if there were no need to retrofit security, then significant cost savings and security benefits would prevail right out of the box. Of course there are no silver bullets, and having multiple layers of security (otherwise known as "defense in depth") often makes sense.

So how do you figure out how much security is appropriate and needed? Well, before we discuss that it is worth reiterating a few important points.

Zero risk is not practical

There are several ways to mitigate risk

Don't spend a million bucks to protect a dime

People argue that the only secure host is one that's unplugged. Even if that were true, an unplugged host is of no functional use and so hardly a practical solution to the security problem. Zero risk is neither achievable nor practical. The goal should always be to determine what the appropriate level of security is for the application to function as planned in its environment. That process normally involves accepting risk.

The second point is that there are many ways to mitigate risk. While this document focuses predominantly on technical countermeasures like selecting appropriate key lengths in cryptography or validating user input, managing the risk may involve accepting it or transferring it. Insuring against the threat occurring or transferring the threat to another application to deal with (such as a Firewall) may be appropriate options for some business models.

The third point is that designers need to understand what they are securing, before they can appropriately specify security controls. It is all too easy to start specifying levels of security before understanding if the application actually needs it. Determining what the core information assets are is a key task in any web application design process. Security is almost always an overhead, either in cost or performance.


risk

(risk)

n.

The possibility of suffering harm or loss; danger.

A factor, thing, element, or course involving uncertain danger; a hazard: "the usual risks of the desert: rattlesnakes, the heat, and lack of water" (Frank Clancy).

The danger or probability of loss to an insurer.

The amount that an insurance company stands to lose.

The variability of returns from an investment.

The chance of nonpayment of a debt.

One considered with respect to the possibility of loss: a poor risk.

threat

n.

An expression of an intention to inflict pain, injury, evil, or punishment.

An indication of impending danger or harm.

One that is regarded as a possible danger; a menace.

vul-ner-a-ble

adj.

Susceptible to physical or emotional injury.

Susceptible to attack: "We are vulnerable both by water and land, without either fleet or army" (Alexander Hamilton).

Open to censure or criticism; assailable.

Liable to succumb, as to persuasion or temptation.

Games. In a position to receive greater penalties or bonuses in a hand of bridge. In a rubber, used of the pair of players who score 100 points toward game.

An attacker (the "Threat") can exploit a Vulnerability (security bug in an application). Collectively this is a Risk.

While we firmly believe measuring risk is more art than science, it is nevertheless an important part of designing the overall security of a system. How many times have you been asked the question "Why should we spend X dollars on this?" Measuring risk generally takes either a qualitative or a quantitative approach.

A quantitative approach is usually more applicable in the realm of physical security or specific asset protection. Whichever approach is taken, however, a successful assessment of the risk is always dependent on asking the right questions. The process is only as good as its input.

A typical quantitative approach, as described below, can help analysts determine a dollar value for an asset (Asset Value or AV), associate an Exposure Factor (EF), the percentage of the asset's value that a realized threat would cost, and consequently determine a Single Loss Expectancy (SLE). From an Annualized Rate of Occurrence (ARO) you can then determine the Annualized Loss Expectancy (ALE) of a particular asset and obtain a meaningful value for it.

Let's explain this in detail:

AV x EF = SLE

If our Asset Value is $1000 and our Exposure Factor (% of loss a realized threat could have on an asset) is 25% then we come out with the following figures:

$1000 x 25% = $250

So, our SLE is $250 per incident. To extrapolate that over a year we can apply another formula:

SLE x ARO = ALE (Annualized Loss Expectancy)

The ARO is the likelihood of a specific threat taking place within a one-year time frame. You can define your own range, but for convenience's sake let's say that the range is from 0.0 (never) to 1.0 (always). Working on this scale, an ARO of 0.1 would indicate that the threat is expected to occur once every ten years. So, going back to our formula, we have the following inputs:

SLE ($250) x ARO (0.1) = $25 (ALE)

Therefore, the cost to us on this particular asset per annum is $25. The benefit to us is obvious: we now have a tangible (or at the very least semi-tangible) cost to associate with protecting the asset. To protect the asset, we can justify a safeguard costing up to $25 per annum.
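As a rough illustration, the two formulas can be sketched in a few lines of Python. The function names below are our own and are not part of any standard library; the inputs are the figures from the worked example ($1000 asset value, 25% exposure factor, ARO of 0.1).

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = AV x EF, where EF is the fraction of the asset's value lost per incident."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure_factor must be between 0.0 and 1.0")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x ARO, where ARO is the expected number of incidents per year."""
    if aro < 0.0:
        raise ValueError("aro must not be negative")
    return sle * aro


# Figures from the worked example in the text.
sle = single_loss_expectancy(asset_value=1000.0, exposure_factor=0.25)
ale = annualized_loss_expectancy(sle, aro=0.1)
print(f"SLE = ${sle:.2f}, ALE = ${ale:.2f}")  # SLE = $250.00, ALE = $25.00
```

The arithmetic is trivial; the hard part, as with any such model, is choosing defensible values for the inputs.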

Quantitative risk assessment is simple, eh? Well, sure, in theory, but actually coming up with those figures in the real world can be daunting and it does not naturally lend itself to software principles. The model described above is also overly simplified. A more realistic technique might be to take a qualitative approach. Qualitative risk assessments don't produce values or definitive answers. They help a designer or analyst narrow down scenarios and document thoughts through a logical process. We all typically undertake qualitative analysis in our minds on a regular basis.

Typically questions may include:

Do the threats come from external or internal parties?

What would the impact be if the software is unavailable?

What would be the impact if the system is compromised?

Is it a financial loss or one of reputation?

Would users actively look for bugs in the code to use to their advantage or can our licensing model prevent them from publishing them?

What logging is required?

What would the motivation be for people to try to break it (e.g. financial application for profit, marketing application for user database, etc.)?

Tools such as the CERIAS CIRDB project (https://cirdb.cerias.purdue.edu/website) can significantly assist in the task of collecting good information on incident-related costs. The development of threat trees and workable security policies is a natural outgrowth of the above questions and should be undertaken for all critical systems.

Qualitative risk assessment is essentially not concerned with a monetary value but with scenarios of potential risks and ranking their potential to do harm. Qualitative risk assessments are subjective!


The following high-level security principles are useful as reference points when designing systems.

User input and output to and from the system is the route for malicious payloads into or out of the system. All user input and user output should be checked to ensure it is both appropriate and expected. The correct strategy for dealing with system input and output is to allow only explicitly defined characteristics and drop all other data. If an input field is for a Social Security Number, then any data that is not a string of nine digits is not valid. A common mistake is to filter for specific strings or payloads in the belief that specific problems can be prevented. Imagine a firewall that allowed everything except a few special sequences of packets!
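A minimal sketch of the "allow only what is explicitly defined" strategy, using the Social Security Number example above. The function name `is_valid_ssn` is hypothetical, and the rule (exactly nine ASCII digits, no separators) is simply the one stated in the text; a real application may need to accept other formats.

```python
import re

# Allowlist: exactly nine ASCII digits and nothing else.
SSN_PATTERN = re.compile(r"[0-9]{9}")


def is_valid_ssn(value: object) -> bool:
    """Return True only if value is a string of exactly nine ASCII digits."""
    # fullmatch requires the whole string to match, so trailing data is rejected.
    return isinstance(value, str) and SSN_PATTERN.fullmatch(value) is not None


for candidate in ["123456789", "123-45-6789", "123456789' OR '1'='1"]:
    print(repr(candidate), "accepted" if is_valid_ssn(candidate) else "dropped")
```

Note that the check never tries to recognize a malicious payload. Anything that is not nine digits is dropped, whatever else it might be.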

Any security mechanism should be designed in such a way that when it fails, it fails closed. That is to say, it should fail to a state that rejects all subsequent security requests rather than allows them. An example would be a user authentication system. If it is not able to process a request to authenticate a user or entity and the process crashes, further authentication requests should be rejected; a crash must never leave the system treating a missing or null result as a successful authentication. A good analogy is a firewall. If a firewall fails it should drop all subsequent packets.
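The fail-closed idea for an authentication check can be sketched as follows. This is an illustration only: `authenticate_fail_closed` and the `check_credentials` callback are hypothetical names standing in for whatever authentication backend an application actually uses.

```python
from typing import Callable, Optional


def authenticate_fail_closed(
    check_credentials: Callable[[str, str], Optional[bool]],
    username: str,
    password: str,
) -> bool:
    """Grant access only when the backend explicitly returns True."""
    try:
        result = check_credentials(username, password)
    except Exception:
        # The backend crashed or is unreachable: deny rather than allow.
        return False
    # None, or any other value that is not exactly True, is a denial.
    return result is True


def broken_backend(username: str, password: str) -> Optional[bool]:
    """Hypothetical backend that is down."""
    raise ConnectionError("authentication service unavailable")


print(authenticate_fail_closed(broken_backend, "alice", "secret"))  # False
```

The design choice is that success is the only special case. Errors, null results and unexpected values all fall through to a denial.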

While it is tempting to build elaborate and complex security controls, the reality is that if a security system is too complex for its user base, it will either not be used or users will try to find measures to bypass it. Often the most effective security is the simplest security. Do not expect users to enter 12 passwords and let the system ask for a random number password, for instance! This message applies equally to tasks that an administrator must perform in order to secure an application. Do not expect an administrator to correctly set a thousand individual security settings, or to search through dozens of layers of dialog boxes to understand existing security settings. Similarly, this message is also intended for security layer APIs that application developers must use to build the system. If the steps to properly secure a function or module of the application are too complex, the odds that the steps will not be properly followed increase greatly.

Invariably other system designers (either on your development team or on the Internet) have faced the same problems as you. They may have invested large amounts of time researching and developing robust solutions to the problem. In many cases they will have improved components through an iterative process and learned from common mistakes along the way. Using and reusing trusted components makes sense both from a resource stance and from a security stance. When someone else has proven they got it right, take advantage of it.

Relying on one component to perform its function 100% of the time is unrealistic. While we hope to build software and hardware that works as planned, predicting the unexpected is difficult. Good systems don't predict the unexpected, but plan for it. If one component fails to catch a security event, a second one should catch it.

We've all seen it: "This system is 100% secure, it uses 128-bit SSL". While it may be true that the data in transit from the user's browser to the web server has appropriate security controls, more often than not the focus of security mechanisms is in the wrong place. As in the real world, where there is no point in placing all of one's locks on one's front door only to leave the back door swinging on its hinges, careful thought must be given to what one is securing. Attackers are lazy and will find the weakest point and attempt to exploit it.

It's naive to think that hiding things from prying eyes doesn't buy some amount of time. Let's face it, some of the biggest exploits unveiled in software have been obscured for years. But obscuring information is very different from protecting it. You are relying on the premise that no one will stumble onto your obfuscation. This strategy doesn't work in the long term and has no guarantee of working in the short term.

Systems should be designed in such a way that they run with the least amount of system privilege they need to do their job. This is the "need to know" approach. If a user account doesn't need root privileges to operate, don't assign them in the anticipation they may need them. Giving the pool man an unlimited bank account to buy the chemicals for your pool while you're on vacation is unlikely to be a positive experience.

Similarly, compartmentalizing users, processes and data helps contain problems if they do occur. Compartmentalization is an important concept widely adopted in the information security realm. Imagine the same pool man scenario. Giving the pool man the keys to the house while you are away so he can get to the pool house, may not be a wise move. Granting him access only to the pool house limits the types of problems he could cause.


Web applications pose unique security challenges to businesses and security professionals in that they expose the integrity of their data to the public. A solid 'extrastructure' is not a controllable criterion for any business. Stringent security must be placed around how users are managed (for example, in agreement with an 'appropriate use' policy) and controls must be commensurate with the value of the information protected. Exposure to public networks may require more robust security features than would normally be present in the internal 'corporate' environment that may have additional compensating security.

Several best practices have evolved across the Internet for the governance of public and private data in tiered approaches. In the most stringently secured systems, separate tiers differentiate between content presentation, security and control of the user session, and the downstream data storage services and protection. What is clear is that to secure private or confidential data, a firewall or 'packet filter' is no longer sufficient to provide for data integrity over a public interface.

Where it is possible, sensible, and economic, architectural solutions to security problems should be favored over technical band-aids. While it is possible to put "protections" in place for most critical data, a much better solution than protecting it is to simply keep it off systems connected to public networks. Thinking critically about what data is important and what worst-case scenarios might entail is central to securing web applications. Special attention should be given to the introduction of "choke" points at which data flows can be analyzed and anomalies quickly addressed.

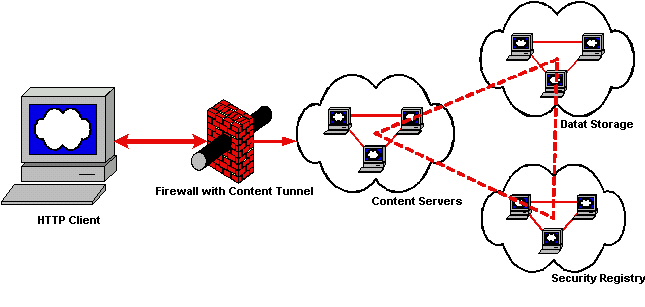

Most firewalls do a decent job of appropriately filtering network packets of a certain construction to predefined data flow paths; however, many of the latest infiltrations of networks occur through the firewall using the ports that the firewall allows through by design or default. It remains critically important that only the content delivery services a firm wishes to provide are allowed to service incoming user requests. Firewalls alone cannot prevent a port-based attack (across an approved port) from succeeding when the targeted application has been poorly written or has skipped input filters for the sake of the almighty performance gain. The tiered approach allows the architect to move key pieces of the architecture into different 'compartments' such that the security registry is not on the same platform as the data store or the content server. Because different services are contained in different 'compartments', a successful exploit of one container does not necessarily mean a total system compromise.

A typical tiered approach to security is presented for the presentation of data to public networks.

A standalone content server provides public access to static repositories. The content server is hosted on a 'hardened' platform in which only the required network listeners and services are running on the platform. Firewalls are optional but a very good idea.

Content services are separated from security repositories and downstream data storage because the use of user credentials is required. The principle at work is to place the controls and content in different compartments and protect the transmission of these confidential tokens using encryption. The user credentials are stored away from the content services and the data repositories such that a compromise of the web tier (content service) doesn't compromise the user registry or the data stores (although the user registry is commonly one of the collections of information in a data store). Segregating the "Security Registry" from the "Content Servers" also allows for more robust controls to be engineered into the functions that validate passwords, record user activity, and define authority roles to data, and additionally provides for some shared resource pooling for common activities such as maintaining a persistent database connection.

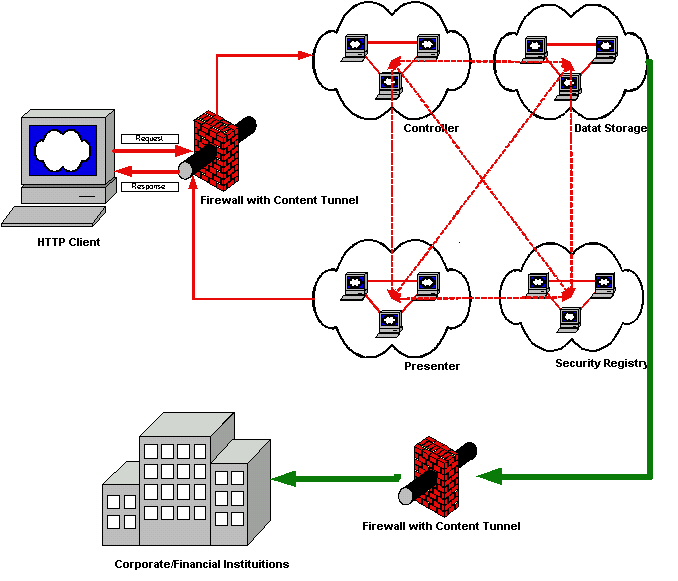

As an example, processing financial transactions typically requires a level of security that is more complex and stringent. Two tiers of firewalls may be needed as a minimal network control, and the content services may be further separated into presentation and control. Auditing of transactions may provide for an 'end-to-end' audit trail in which changes to financial transaction systems are logged with session keys that encapsulate the user identity, originating source network address, time-of-day and other information, and pass this information with the transaction data to the systems that clear the transactions with financial institutions. Encryption may be a requirement for electronic transmissions throughout each of the tiers of this architecture and for the storage of tokens, credentials and other sensitive information.

Digital signing of certain transactions may also be enforced if required by materiality, statutory or legal constraints. Defined conduits are also required between each of the tiers of the services to provide only for those protocols that are required by the architecture. Middleware is a key component; however, middle tier Application Servers can alternatively provide many of the services provided by traditional middleware.

In general, relying on the operating system for security services is not a good strategy. That is not to say the operating system is not expected to provide a secure operating environment. Services like authentication and authorization are generally not appropriately handled for an application by the operating system. Of course this flies in the face of Microsoft's .NET platform strategy and Sun's JAAS. There are times when it is appropriate, but in general you should abstract the security services you need away from the operating system. History shows that too many system compromises have been caused by applications with direct access to parts of the operating system. Kernels generally don't protect themselves. Thus if a bad enough security flaw is found in a part of the operating system, the whole operating system can be compromised and the applications fall victim to the attacker. If the purpose of an operating system is to provide a secure environment for running applications, exposing its security interfaces is not a strategically sound idea.

Web applications run on operating systems that depend on networks to share data with peers and service providers. Each layer of these services should build upon the layers below it. The bottom and fundamental layer of security and control is the network layer. Network controls can range from Access Control Lists at the minimal end to clustered stateful firewall solutions at the top end. The two primary types of commercial firewalls are proxy-based and packet inspectors, and the differences seem to be blurring with each new product release. The proxies now have packet inspection and the packet inspectors now support HTTP and SOCKS proxies.

Proxy firewalls primarily stop a transaction on one interface, inspect the packet in the application layer and then forward the packets out another interface. Proxy firewalls aren't necessarily dual-homed as they can be implemented solely to stop stateful sessions and provide the forwarding features on the same interface; however, the key feature of a proxy is that it breaks the state into two distinct phases. A key benefit of proxy-based solutions is that users may be forced to authenticate to the proxy before their request is serviced, thereby providing for a level of control that is stronger than that afforded simply by the requestor's TCP/IP address.

Packet inspectors receive incoming requests and attempt to match the header portions of packets (along with other possible feature sets) with known traffic signatures. If the traffic signatures match an 'allowed' rule the packets are allowed to pass through the firewall. If the traffic signatures match 'deny' rules, or they don't match 'allowed' rules, they should be rejected or dropped. Packet inspectors can be further broken into two categories: stateful and non-stateful. A stateful packet inspection firewall learns a session characteristic when the initial session is built after it passes the rulebase, and requires no return rule. The outbound and inbound rules must be programmed into a non-stateful packet inspection firewall.

Regardless of the firewall platform adopted for each specific business need, the general rule is to restrict traffic between web clients and web content servers by allowing only external inbound connections to be formed over ports 80 and 443. Additional firewall rulesets may be required to pass traffic between Application Servers and RDBMS engines, for example over port 1521. Segmenting the network and providing for routing 'chokes' and 'gateways' is the key to providing for robust security at the network layers.


Authentication is the process of determining if a user or entity is who he/she claims to be.

In a web application it is easy to confuse authentication and session management (dealt with in a later section). Users are typically authenticated by a username and password or similar mechanism. When authenticated, a session token is usually placed into the user's browser (stored in a cookie). This allows the browser to send a token each time a request is being made, thus performing entity authentication on the browser. The act of user authentication usually takes place only once per session, but entity authentication takes place with every request.

As mentioned there are principally two types of authentication and it is worth understanding the two types and determining which you really need to be doing.

User Authentication is the process of determining that a user is who he/she claims to be.

Entity authentication is the process of determining if an entity is who it claims to be.

Imagine a scenario where an Internet bank authenticates a user initially (user authentication) and then manages sessions with session cookies (entity authentication). If the user now wishes to transfer a large sum of money to another account 2 hours after logging on, it may be reasonable to expect the system to re-authenticate the user!

When reading the following sections on the possible means of providing authentication mechanisms, it should be firmly in the mind of the reader that ALL data sent to clients over public links should be considered "tainted" and all input should be rigorously checked. SSL will not solve problems of authentication nor will it protect data once it has reached the client. Consider all input hostile until proven otherwise and code accordingly.

There are several ways to do user authentication over HTTP. The simplest is referred to as HTTP Basic authentication. When a request is made to a URI, the web server returns an HTTP 401 Unauthorized status code to the client:

HTTP/1.1 401 Authorization Required

This tells the client to supply a username and password. Included in the 401 response is the WWW-Authenticate header. The client requests the username and password from the user, typically in a dialog box. The client browser concatenates the username and password using a ":" separator and base64-encodes the string. A second request is then made for the same resource, including the encoded username and password string in the Authorization header.
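The encoding step described above can be sketched in a few lines. This is a minimal illustration, not a full HTTP client; the function names and the sample credentials are assumptions made for the example. The second function shows that the encoding is trivially reversible, since no key is involved.

```python
import base64
from typing import Tuple


def basic_auth_header(username: str, password: str) -> str:
    """Build the Authorization header value for HTTP Basic authentication."""
    # Join username and password with a colon, then base64-encode the bytes.
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_auth(header_value: str) -> Tuple[str, str]:
    """Recover the credentials from a Basic header; no secret is required."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic authorization header")
    decoded = base64.b64decode(encoded).decode("utf-8")
    # Only the first colon separates the username from the password.
    username, _, password = decoded.partition(":")
    return username, password
```

Anyone who can observe the header can call the equivalent of `decode_basic_auth`, which is why Basic authentication needs transport security.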

HTTP authentication has a problem in that there is no mechanism available to the server to cause the browser to 'logout'; that is, to discard its stored credentials for the user. This presents a problem for any web application that may be used from a shared user agent.

The username and password of course travel in effective clear-text in this process and the system designers need to provide transport security to protect them in transit. SSL and TLS are the most common ways of providing confidentiality and integrity in transit for web applications.

There are two forms of HTTP Digest authentication that were designed to prevent the problem of username and password being interceptable. The original digest specification was developed as an extension to HTTP 1.0, with an improved scheme defined for HTTP 1.1. Given that the original digest scheme can work over HTTP 1.0 and HTTP 1.1 we will describe both for completeness. The purpose of digest authentication schemes is to allow users to prove they know a password without disclosing the actual password. The Digest Authentication Mechanism was originally developed to provide a general use, simple implementation, authentication mechanism that could be used over unencrypted channels.

As can be seen in the figure above, an important part of ensuring security is the addition of unique data sent by the server when setting up digest authentication. If no unique data were supplied for each request, an attacker would simply be able to replay the digest or hash.

The authentication process begins with a 401 Unauthorized response as with basic authentication. An additional WWW-Authenticate header is added that explicitly requests digest authentication. A nonce is generated (the data) and the digest computed. The actual calculation is as follows:

String "A1" consists of username, realm, password concatenated with colons.

owasp:[email protected]:password

Calculate MD5 hash of this string and represent the 128 bit output in hex

String "A2" consists of method and URI

GET:/guide/index.shtml

Calculate MD5 of "A2" and represent the output as ASCII hex digits.

Concatenate the hash of A1, the nonce and the hash of A2 using colons

Compute MD5 of this string and represent it as ASCII hex digits

This is the final digest value sent.
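The calculation listed above can be sketched as follows. This is an illustration of the original (HTTP 1.0 era) digest computation only; the function names are assumptions, and any realm or nonce passed in would come from the server's WWW-Authenticate header.

```python
import hashlib


def md5_hex(text: str) -> str:
    """Return the MD5 of a string as 32 lowercase hex characters."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username: str, realm: str, password: str,
                    method: str, uri: str, nonce: str) -> str:
    """Compute the digest value: MD5(MD5(A1) ":" nonce ":" MD5(A2))."""
    # A1 is username, realm and password joined with colons.
    hashed_a1 = md5_hex(f"{username}:{realm}:{password}")
    # A2 is the request method and URI joined with a colon.
    hashed_a2 = md5_hex(f"{method}:{uri}")
    # The hashed values, not the raw strings, are combined with the nonce.
    return md5_hex(f"{hashed_a1}:{nonce}:{hashed_a2}")
```

Because the nonce is mixed into the final hash, the same credentials yield a different digest whenever the server issues a new nonce.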

As mentioned, HTTP 1.1 specifies an improved digest scheme that adds:

Protection against replay attacks

Mutual authentication

Integrity protection

The digest scheme in HTTP 1.0 is susceptible to replay attacks. This occurs because an attacker can replay the correctly calculated digest for the same resource. In effect the attacker sends the same request to the server. The improved digest scheme of HTTP 1.1 adds an nc (nonce count) parameter to the Authorization header. This eight digit number, represented in hex, increments each time the client makes a request with the same nonce. The server must check that the nc is greater than the last nc value it received and thus not honor replayed requests.

Other significant improvements of the HTTP 1.1 scheme are integrity protection and mutual authentication, which enables clients to authenticate servers as well as allowing servers to authenticate clients.
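The server-side nonce count check might look like the sketch below. It assumes an in-memory table keyed by nonce, which is a simplification; the class and method names are illustrative and not part of any specification.

```python
import string
from typing import Dict


class NonceCounter:
    """Remember the highest nonce count seen for each nonce the server issued."""

    def __init__(self) -> None:
        self._last_nc: Dict[str, int] = {}

    def accept(self, nonce: str, nc_hex: str) -> bool:
        """Return True only if nc_hex is well formed and strictly increasing."""
        # The nonce count is an eight digit hexadecimal number.
        if len(nc_hex) != 8 or any(c not in string.hexdigits for c in nc_hex):
            return False
        nc = int(nc_hex, 16)
        # A replayed request carries a count the server has already seen.
        if nc <= self._last_nc.get(nonce, 0):
            return False
        self._last_nc[nonce] = nc
        return True
```

A real server would also expire old nonces so that the table does not grow without bound.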

Rather than relying on authentication at the protocol level, web based applications can use code embedded in the web pages themselves. Specifically, developers have previously used HTML FORMs to request the authentication credentials (this is supported by the TYPE=PASSWORD input element). This allows a designer to present the request for credentials (Username and Password) as a normal part of the application and with all the HTML capabilities for internationalization and accessibility.

While dealt with in more detail in a later section it is essential that authentication forms are submitted using a POST request. GET requests show up in the user's browser history and therefore the username and password may be visible to other users of the same browser.

Of course schemes using forms-based authentication need to implement their own protection against the classic protocol attacks described here and build suitably secure storage for the password repository.

A common scheme with Web applications is to prefill form fields for users whenever possible. A user returning to an application may wish to confirm his profile information, for example. Most applications will prefill a form with the current information and then simply require the user to alter the data where it is inaccurate. Password fields, however, should never be prefilled for a user. The best approach is to have a blank password field asking the user to confirm his current password and then two password fields to enter and confirm a new password. Most often, the ability to change a password should be on a page separate from that for changing other profile information.

This approach offers two advantages. Users may carelessly leave a prefilled form on their screen allowing someone with physical access to see the password by viewing the source of the page. Also, should the application allow (through some other security failure) another user to see a page with a prefilled password for an account other than his own, a "View Source" would again reveal the password in plain text. Security in depth means protecting a page as best you can, assuming other protections will fail.

Note: Forms based authentication requires the system designers to create an authentication protocol taking into account the same problems that HTTP Digest authentication was created to deal with. Specifically, the designer should remember that forms submitted using GET or POST will send the username and password in effective clear-text, unless SSL is used.

Both SSL and TLS can provide client, server and mutual entity authentication. Detailed descriptions of the mechanisms can be found in the SSL and TLS sections of this document. Digital certificates are a mechanism to authenticate the providing system and also provide a mechanism for distributing public keys for use in cryptographic exchanges (including user authentication if necessary). Various certificate formats are in use. By far the most widely accepted is the International Telecommunication Union's X.509 v3 certificate (refer to RFC 2459). Another common cryptographic messaging protocol is PGP. Although parts of the commercial PGP product (no longer available from Network Associates) are proprietary, the OpenPGP Alliance (http://www.openPGP.org) represents groups who implement the OpenPGP standard (refer to RFC 2440).

The most common usage for digital certificates on web systems is for entity authentication when attempting to connect to a secure web site (SSL). Most web sites work purely on the premise of server side authentication even though client side authentication is available. This is due to the scarcity of client side certificates: the current web deployment model relies on users obtaining their own personal certificates from a trusted vendor, and this hasn't really happened on any kind of large scale.

For high security systems, client side authentication is a must and as such a certificate issuance scheme (PKI) might need to be deployed. Further, if individual user level authentication is required, then 2-factor authentication will be necessary.

There is a range of issues concerned with the use of digital certificates that should be addressed:

Where is the root of trust? That is, at some point the digital certificate must be signed; who is trusted to sign the certificate? Commercial organizations provide such a service identifying degrees of rigor in identification of the providing parties, permissible trust and liability accepted by the third party. For many uses this may be acceptable, but for high-risk systems it may be necessary to define an in-house Public Key Infrastructure.

Certificate management: who can generate the key pairs and send them to the signing authority?

What is the Naming convention for the distinguished name tied to the certificate?

What is the revocation/suspension process?

What is the key recovery infrastructure process?

Many other issues in the use of certificates must be addressed, but the architecture of a PKI is beyond the scope of this document.

Cookies are often used to authenticate the user's browser as part of session management mechanisms. This is discussed in detail in the session management section of this document.

The referer [sic] header is sent with a client request to show where the client obtained the URI. On the face of it, this may appear to be a convenient way to determine that a user has followed a path through an application or been referred from a trusted domain. However, the referer is implemented by the user's browser and is therefore chosen by the user. Referers can be changed at will and therefore should never be used for authentication purposes.

There are many times when applications need to authenticate other hosts or applications. IP addresses or DNS names may appear like a convenient way to do this. However the inherent insecurities of DNS mean that this should be used as a cursory check only, and as a last resort.

Usernames and passwords are the most common form of authentication in use today. Despite the improved mechanisms over which authentication information can be carried (like HTTP Digest and client side certificates), most systems usually require a password as the token against which initial authorization is performed. Due to the conflicting goals that good password maintenance schemes must meet, passwords are often the weakest link in an authentication architecture. More often than not, this is due to human and policy factors and can be only partially addressed by technical remedies. Some best practices are outlined here, as well as risks and benefits for each countermeasure. As always, those implementing authentication systems should measure risks and benefits against an appropriate threat model and protection target.

While usernames have few requirements for security, a system implementor may wish to place some basic restriction on the username. Usernames that are derivations of a real name or actual real names can clearly give personal detail clues to an attacker. Other usernames like social security numbers or tax IDs may have legal implications. Email addresses are not good usernames for the reason stated in the Password Lockout section.

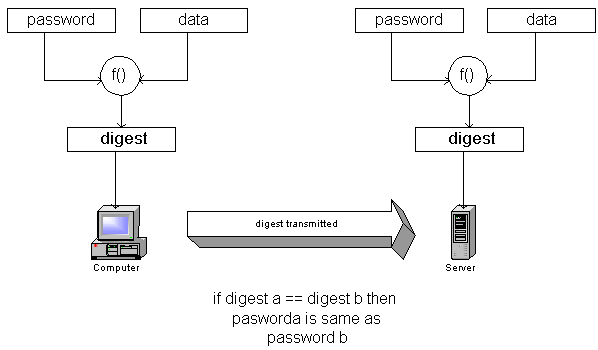

In all password schemes the system must maintain storage of usernames and corresponding passwords to be used in the authentication process. This is still true for web applications that use the built in data store of operating systems like Windows NT. This store should be secure. By secure we mean the passwords should be stored in such a way that the application can compute and compare passwords presented to it as part of an authentication scheme, but the database should not be able to be used or read by administrative users or by an adversary who manages to compromise the system. Hashing the passwords with a simple hash algorithm like SHA-1 is a commonly used technique.
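As an illustration of storing passwords so that the application can compare but not read them, here is a minimal sketch. Note that it deliberately swaps in a different technique from the plain SHA-1 hash named above: it uses a per-user random salt and the iterated PBKDF2-HMAC-SHA256 function from the Python standard library. The iteration count and function names are assumptions chosen for the example.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # assumed work factor, tune for the deployment


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) to store in place of the password."""
    salt = os.urandom(16)  # a fresh random salt for every stored password
    derived = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                  salt, ITERATIONS)
    return salt, derived


def verify_password(password: str, salt: bytes, stored: bytes) -> bool:
    """Recompute the derived key and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                    salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored)
```

The salt means two users with the same password get different stored values, and the iteration count slows down offline guessing if the store is stolen.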

Password quality refers to the entropy of a password and is clearly essential to ensure the security of the users' accounts. A password of "password" is obviously a bad thing. A good password is one that is infeasible to guess. That typically means a password of at least 8 characters that mixes upper and lower case letters and includes at least one digit and at least one special character (not A-Z, a-z or 0-9). In web applications special care needs to be taken with meta-characters.

If an attacker is able to guess passwords without the account becoming disabled, then eventually he will probably be able to guess at least one password. Automating password checking across the web is very simple! Password lockout mechanisms should be employed that lock out an account if more than a preset number of unsuccessful login attempts are made. A suitable number would be five.

Password lockout mechanisms do have a drawback, however. It is conceivable that an adversary can try a large number of random passwords on known account names, thus locking out entire systems of users. Given that the intent of a password lockout system is to protect from brute-force attacks, a sensible strategy is to lock out accounts for a number of hours. This significantly slows down attackers, while allowing the accounts to be open for legitimate users.
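A time-limited lockout of the kind described above could be sketched as follows. The two hour lockout period, the class name and the in-memory dictionaries are assumptions for illustration; the threshold of five follows the number suggested earlier, read here as locking on the fifth consecutive failure.

```python
import time
from typing import Callable, Dict

MAX_FAILURES = 5                 # threshold suggested in the text
LOCKOUT_SECONDS = 2 * 60 * 60    # assumed lockout period of two hours


class LoginThrottle:
    """Track failed logins per account and lock accounts temporarily."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._failures: Dict[str, int] = {}
        self._locked_until: Dict[str, float] = {}

    def is_locked(self, username: str) -> bool:
        until = self._locked_until.get(username)
        if until is None:
            return False
        if self._clock() >= until:
            # The lockout has run its course; reopen the account.
            del self._locked_until[username]
            self._failures.pop(username, None)
            return False
        return True

    def record_failure(self, username: str) -> None:
        if self.is_locked(username):
            return
        count = self._failures.get(username, 0) + 1
        self._failures[username] = count
        if count >= MAX_FAILURES:
            self._locked_until[username] = self._clock() + LOCKOUT_SECONDS

    def record_success(self, username: str) -> None:
        # A successful login clears the failure history.
        self._failures.pop(username, None)
```

The injectable `clock` parameter exists only so the behavior can be exercised without waiting; a login handler would call `is_locked` before checking the password at all.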

Rotating passwords is generally good practice. This gives valid passwords a limited life cycle. Of course, if a compromised account is asked to refresh its password then there is no advantage.

Automated password reset systems are common. They allow users to reset their own passwords without the latency of calling a support organization. They clearly pose some security risks in that a password needs to be issued to a user who cannot authenticate himself.

There are several strategies for doing this. One is to ask a set of questions during registration that can be asked of someone claiming to be a specific user. These questions should be free form, i.e., the application should allow the user to choose his own question and the corresponding answer rather than selecting from a set of predetermined questions. This typically generates significantly more entropy.

Care should be taken to never render the questions and answers in the same session for confirmation; i.e., during registration either the question or answer may be echoed back to the client, but never both.

If a system utilizes a registered email address to distribute new passwords, the account should be set to require a password change the first time the user logs on with the new password.

It is usually good practice to confirm all password management changes to the registered email address. While email is inherently insecure and this is certainly no guarantee of notification, it is significantly harder for an adversary to be able to intercept the email consistently.

In highly secure systems passwords should only be sent via a courier mechanism or reset with solid proof of identity. Processes such as requiring valid government ID to be presented to an account administrator are common.

With outsourcing, hosting and ASP models becoming more prevalent, facilitating a single sign-on experience to users is becoming more desirable. The Microsoft Passport and Project Liberty schemes will be discussed in future revisions of this document.

Many web applications have relied on SSL as providing sufficient authentication for two servers to communicate and exchange trusted user information to provide a single sign on experience. On the face of it this would appear sensible. SSL provides both authentication and protection of the data in transit.

However, poorly implemented schemes are often susceptible to man in the middle attacks. A common scenario is as follows:

The common problem here is that designers typically rely on the fact that SSL will protect the payload in transit and assume that it will not be modified. They of course forget about the malicious user. If the token consists of a simple username then the attacker can intercept the HTTP 302 redirect in a Man-in-the-Middle attack, modify the username and send the new request. To do secure single sign-on the token must be protected outside of SSL. This would typically be done by using symmetric algorithms with a pre-exchanged key and including a time-stamp in the token to prevent replay attacks.
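One way to protect such a token outside of SSL is sketched below. It assumes HMAC-SHA256 as the symmetric primitive (the text does not name an algorithm), a hypothetical `username|timestamp|mac` layout and a 60 second validity window. It protects the token's integrity only; it does not hide the username.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_AGE_SECONDS = 60  # assumed validity window for a hand-off token


def make_token(username: str, key: bytes, now: Optional[float] = None) -> str:
    """Issue 'username|timestamp|mac' using the pre-exchanged key."""
    issued = int(time.time() if now is None else now)
    payload = f"{username}|{issued}"
    mac = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{payload}|{mac}"


def verify_token(token: str, key: bytes,
                 now: Optional[float] = None) -> Optional[str]:
    """Return the username if the token is authentic and fresh, else None."""
    try:
        username, issued_text, mac = token.rsplit("|", 2)
        issued = int(issued_text)
    except ValueError:
        return None
    payload = f"{username}|{issued_text}"
    expected = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    # Constant-time comparison; a modified username changes the expected MAC.
    if not hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8")):
        return None
    current = time.time() if now is None else now
    if not 0 <= current - issued <= MAX_AGE_SECONDS:
        return None
    return username
```

The time-stamp narrows the replay window rather than closing it; a receiving server that must reject every replay would also need to remember the tokens it has already accepted within the window.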


HTTP is a stateless protocol, meaning web servers respond to client requests without linking them to each other. Applying a state mechanism scheme allows a user's multiple requests to be associated with each other across a "session." Being able to separate and recognize users' actions to specific sessions is critical to web security. While a preferred cookie mechanism (RFC 2965) exists to build session management systems, it is up to a web designer / developer to implement a secure session management scheme. Poorly designed or implemented schemes can lead to compromised user accounts, which in too many cases may also have administrative privileges.

For most state mechanism schemes, a session token is transmitted between HTTP server and client. Session tokens are often stored in cookies, but also in static URLs, dynamically rewritten URLs, hidden in the HTML of a web page, or some combination of these methods.

Love them or loathe them, cookies are now a requisite for use of many online banking and e-commerce sites. Cookies were never designed to store usernames and passwords or any sensitive information. Being attuned to this design decision is helpful in understanding how to use them correctly. Cookies were originally introduced by Netscape and are now specified in RFC 2965 (which supersedes RFC 2109), with RFC 2964 and BCP44 offering guidance on best practice. Cookies are categorized along two axes, secure or non-secure and persistent or non-persistent, giving four individual cookie types.

Persistent and Secure

Persistent and Non-Secure

Non-Persistent and Secure

Non-Persistent and Non-Secure

Persistent cookies are stored in a text file (cookies.txt under Netscape and multiple *.txt files for Internet Explorer) on the client and are valid for as long as the expiry date is set for (see below). Non-Persistent cookies are stored in RAM on the client and are destroyed when the browser is closed or the cookie is explicitly killed by a log-off script.

Secure cookies can only be sent over HTTPS (SSL). Non-Secure cookies can be sent over HTTPS or regular HTTP. The title of secure is somewhat misleading. It only provides transport security. Any data sent to the client should be considered under the total control of the end user, regardless of the transport mechanism in use.

Cookies can be set using two main methods, HTTP headers and JavaScript. JavaScript is becoming a popular way to set and read cookies as some proxies will filter cookies set as part of an HTTP response header. Cookies enable a server and browser to pass information among themselves between sessions. Remembering HTTP is stateless, this may simply be between requests for documents in the same session or even when a user requests an image embedded in a page. It is rather like a server stamping a client, and saying show this to me next time you come in. Cookies cannot be shared (read or written) across DNS domains. In correct client operation Domain A can't read Domain B's cookies, but there have been many vulnerabilities in popular web clients which have allowed exactly this. Under HTTP the server responds to a request with an extra header. This header tells the client to add this information to the client's cookies file or store the information in RAM. After this, all requests to that URL from the browser will include the cookie information as an extra header in the request.

A typical cookie used to store a session token (for redhat.com for example) looks much like:

Table 7.1. Structure Of A Cookie

| Domain | Flag | Path | Secure | Expiration | Name | Value |
|---|---|---|---|---|---|---|
| www.redhat.com | FALSE | / | FALSE | 1154029490 | Apache | 64.3.40.151.16018996349247480 |

The columns above illustrate the parameters that can be stored in a cookie.

From left-to-right, here is what each field represents:

domain: The website domain that created and that can read the variable.

flag: A TRUE/FALSE value indicating whether all machines within a given domain can access the variable.

path: The path attribute supplies a URL range for which the cookie is valid. If path is set to /reference, the cookie will be sent for URLs in /reference as well as sub-directories such as /reference/webprotocols. A pathname of "/" indicates that the cookie will be used for all URLs at the site from which the cookie originated.

secure: A TRUE/FALSE value indicating if an SSL connection with the domain is needed to access the variable.

expiration: The Unix time that the variable will expire on. Unix time is defined as the number of seconds since 00:00:00 GMT on Jan 1, 1970. Omitting the expiration date signals to the browser to store the cookie only in memory; it will be erased when the browser is closed.

name: The name of the variable (in this case Apache).

So the cookie above, named Apache, has the value 64.3.40.151.16018996349247480 and is set to expire on July 27, 2006, for the website domain www.redhat.com.
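The expiration field can be checked by converting the Unix time into a calendar date. The helper names below are assumptions made for this small sketch.

```python
from datetime import datetime, timezone


def cookie_expiry(unix_seconds: int) -> datetime:
    """Convert a cookie's Unix-time expiration into a UTC datetime."""
    return datetime.fromtimestamp(unix_seconds, tz=timezone.utc)


def is_expired(unix_seconds: int, now: datetime) -> bool:
    """A cookie is expired once the current time reaches its expiration."""
    return now >= cookie_expiry(unix_seconds)


# The expiration value from the table above falls on 27 July 2006 (UTC).
expires = cookie_expiry(1154029490)
```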

The website sets the cookie in the user's browser in plaintext in the HTTP stream like this:

Set-Cookie: Apache="64.3.40.151.16018996349247480"; path="/"; domain="www.redhat.com"; path_spec; expires="2006-07-27 19:39:15Z"; version=0

The limit on the size of each cookie (name and value combined) is 4 KB.

A maximum of 20 cookies per server or domain is allowed.

All session tokens (independent of the state mechanisms) should be user unique, non-predictable, and resistant to reverse engineering. A trusted source of randomness should be used to create the token (like a cryptographically strong pseudo-random number generator, Yarrow, EGADS, etc.). Additionally, for more security, session tokens should be tied in some way to a specific HTTP client instance to prevent hijacking and replay attacks. Examples of mechanisms for enforcing this restriction may be the use of page tokens which are unique for any generated page and may be tied to session tokens on the server. In general, a session token algorithm should never be based on or use as variables any user personal information (user name, password, home address, etc.).
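A minimal sketch of generating such a token follows. It assumes Python's standard `secrets` module as the trusted source of randomness (rather than Yarrow or EGADS) and a 32 byte token; the user identity is kept in a server-side table so that nothing personal is encoded in the token itself.

```python
import secrets
from typing import Dict, Optional

# Server-side table mapping opaque tokens to user identifiers (illustrative).
_sessions: Dict[str, str] = {}


def new_session_token(user_id: str, num_bytes: int = 32) -> str:
    """Create an unpredictable token and remember which user it belongs to."""
    # token_urlsafe draws from the operating system's secure random source.
    token = secrets.token_urlsafe(num_bytes)
    _sessions[token] = user_id
    return token


def user_for_token(token: str) -> Optional[str]:
    """Look up the user for a token; unknown tokens yield None."""
    return _sessions.get(token)
```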

Even the most cryptographically strong algorithm still allows an active session token to be easily determined if the keyspace of the token is not sufficiently large. Attackers can essentially "grind" through most possibilities in the token's key space with automated brute force scripts. A token's key space should be large enough to prevent these types of brute force attacks, keeping in mind that computation and bandwidth capacity increases will make these numbers insufficient over time.
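The effect of key space size can be estimated with simple arithmetic. The sketch below is a rough approximation that assumes uniformly random tokens and an attacker guessing at random; the session counts and guess rates used with it are hypothetical.

```python
def seconds_to_first_hit(token_bits: int, active_sessions: int,
                         guesses_per_second: float) -> float:
    """Estimate how long random guessing takes to hit any one valid token."""
    keyspace = 2 ** token_bits
    # With many valid tokens live at once, roughly keyspace / sessions
    # guesses are needed on average before one of them is found.
    expected_guesses = keyspace / active_sessions
    return expected_guesses / guesses_per_second
```

With a 32 bit token, 10,000 active sessions and 1,000 guesses per second, the estimate is about seven minutes. With a 128 bit token and the same assumptions it is on the order of 10^31 seconds.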

Session tokens that do not expire on the HTTP server can allow an attacker unlimited time to guess or brute force a valid authenticated session token. An example is the "Remember Me" option on many retail websites. If a user's cookie file is captured or brute-forced, then an attacker can use these static-session tokens to gain access to that user's web accounts. Additionally, session tokens can be potentially logged and cached in proxy servers that, if broken into by an attacker, may contain similar sorts of information in logs that can be exploited if the particular session has not been expired on the HTTP server.

To prevent Session Hijacking and Brute Force attacks from occurring to an active session, the HTTP server can seamlessly expire and regenerate tokens to give an attacker a smaller window of time for replay exploitation of each legitimate token. Token expiration can be performed based on number of requests or time.
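Expiring and regenerating tokens on the server might be sketched as below. The limits, class name and in-memory storage are assumptions for illustration: a token is discarded once it is older than `max_age` seconds, and it is swapped for a fresh one after `max_requests` requests.

```python
import secrets
import time
from typing import Callable, Dict, Optional


class SessionStore:
    """Issue session tokens that expire by age and rotate by request count."""

    def __init__(self, max_age: float = 900.0, max_requests: int = 50,
                 clock: Callable[[], float] = time.time) -> None:
        self._max_age = max_age
        self._max_requests = max_requests
        self._clock = clock
        self._sessions: Dict[str, dict] = {}

    def create(self, user_id: str) -> str:
        token = secrets.token_urlsafe(32)
        self._sessions[token] = {"user": user_id, "issued": self._clock(),
                                 "requests": 0}
        return token

    def touch(self, token: str) -> Optional[str]:
        """Validate one request; return the token to use next, or None."""
        session = self._sessions.get(token)
        if session is None:
            return None
        if self._clock() - session["issued"] > self._max_age:
            del self._sessions[token]  # too old: expire on the server
            return None
        session["requests"] += 1
        if session["requests"] >= self._max_requests:
            # Retire the old token and hand the client a fresh one.
            del self._sessions[token]
            return self.create(session["user"])
        return token
```

The caller would send the returned token back to the browser on each response, so a captured token stops working once it has been rotated or has aged out.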

Many websites have prohibitions against unrestrained password guessing (e.g., they can temporarily lock the account or stop listening to the IP address). With regard to session token brute-force attacks, an attacker can probably try hundreds or thousands of session tokens embedded in a legitimate URL or cookie, for example, without a single complaint from the HTTP server. Many intrusion-detection systems do not actively look for this type of attack; penetration tests also often overlook this weakness in web e-commerce systems. Designers can use "booby trapped" session tokens that never actually get assigned but will detect if an attacker is trying to brute force a range of tokens. Resulting actions can either ban the originating IP address (all users behind the same proxy will be affected) or lock out the account (a potential DoS). Anomaly/misuse detection hooks can also be built in to detect if an authenticated user tries to manipulate their token to gain elevated privileges.

Critical user actions such as money transfer or significant purchase decisions should require the user to re-authenticate or be reissued another session token immediately prior to significant actions. Developers can also somewhat segment data and user actions to the extent where re-authentication is required upon crossing certain "boundaries" to prevent some types of cross-site scripting attacks that exploit user accounts.

If a session token is captured in transit through network interception, a web application account is then trivially prone to a replay or hijacking attack. Typical web encryption technologies used to safeguard the state mechanism token include, but are not limited to, the Secure Sockets Layer (SSLv2/v3) and Transport Layer Security (TLS v1) protocols.

With the popularity of Internet Kiosks and shared computing environments on the rise, session tokens take on a new risk. A browser only destroys session cookies when the browser thread is torn down. Most Internet kiosks maintain the same browser thread. It is therefore a good idea to overwrite session cookies when the user logs out of the application.
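Overwriting the session cookie at logout can be sketched with the standard library's cookie helper. The cookie name `session` and the placeholder value are assumptions; the header asks the browser to replace the token with a dummy value that has already expired.

```python
from http.cookies import SimpleCookie


def logout_cookie_header(name: str = "session", path: str = "/") -> str:
    """Build a Set-Cookie value that overwrites and expires a session cookie."""
    cookie = SimpleCookie()
    cookie[name] = "deleted"  # replace the real token with a dummy value
    cookie[name]["path"] = path
    # An expiry date in the past tells the browser to discard the cookie.
    cookie[name]["expires"] = "Thu, 01 Jan 1970 00:00:00 GMT"
    cookie[name]["max-age"] = 0
    return cookie[name].OutputString()
```

The path must match the one used when the cookie was set, otherwise the browser treats it as a different cookie. The server should also invalidate the token on its side, since the browser cannot be relied on to honor the request.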

Page specific tokens or "nonces" may be used in conjunction with session specific tokens to provide a measure of authenticity when dealing with client requests. Used in conjunction with transport layer security mechanisms, page tokens can aid in ensuring that the client on the other end of the session is indeed the same client which requested the last page in a given session. Page tokens are often stored in cookies or query strings and should be completely random. It is possible to avoid sending session token information to the client entirely through the use of page tokens, by creating a mapping between them on the server side. This technique should further increase the difficulty of brute forcing session authentication tokens.

The Secure Socket Layer protocol or SSL was designed by Netscape and included in the Netscape Communicator browser. SSL is probably the most widely used security protocol in the world and is built in to all commercial web browsers and web servers. The current version is Version 3. As the original version of SSL designed by Netscape is technically a proprietary protocol, the Internet Engineering Task Force (IETF) took over responsibility for upgrading SSL and has now renamed it TLS or Transport Layer Security. The first version of TLS, TLS 1.0, identifies itself in the protocol as version 3.1 and has only minor changes from the SSL Version 3 specification.

SSL can provide three security services for the transport of data to and from web services. Those are:

Authentication

Confidentiality

Integrity

Contrary to the unfounded claims of many marketing campaigns, SSL alone does not secure a web application! The phrase "this site is 100% secure, we use SSL" can be misleading! SSL only provides the services listed above. SSL/TLS provide no additional security once data has left the IP stack on either end of a connection. All flaws in execution environments which use SSL for session transport are in no way abetted or mitigated through the use of SSL.

SSL uses both public key and symmetric cryptography. You will often hear SSL certificates mentioned. SSL certificates are X.509 certificates. A certificate is a public key that is signed by another trusted party (with some additional information to validate that trust).

For the purpose of simplicity we are going to refer to both SSL and TLS as SSL in this section. A more complete treatment of these protocols can be found in Stephen Thomas's "SSL and TLS Essentials".

SSL has two major modes of operation. In the first, the SSL tunnel is set up and only the server is authenticated; in the second, both the server and the client are authenticated. In both cases the SSL session is set up before the HTTP transaction takes place.
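As a rough illustration of the two modes, the sketch below configures a server-side context with Python's standard ssl module, which implements TLS rather than the original SSL. The helper name server_context is an assumption, and loading the server's own certificate and the trusted client CA list is deliberately left out.

```python
import ssl


def server_context(require_client_cert: bool) -> ssl.SSLContext:
    """Return a server-side context for one of the two modes of operation.

    A real server would also call ctx.load_cert_chain(...) for its own
    certificate and ctx.load_verify_locations(...) for trusted client CAs;
    both are omitted here because they need files on disk.
    """
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    if require_client_cert:
        # Mutual authentication: the handshake fails without a valid client certificate.
        ctx.verify_mode = ssl.CERT_REQUIRED
    else:
        # Server authentication only: no certificate is requested from the client.
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


print(server_context(False).verify_mode)
print(server_context(True).verify_mode)
```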

SSL negotiation with server authentication only is a nine-step process.

1.) The client sends the server a Client Hello message. This hello message contains the SSL version and the cipher suites the client can talk. The client sends its maximum key length details at this time.

2.) The server answers the hello message with one of its own (Server Hello), in which it nominates the version of SSL and the ciphers and key lengths to be used in the conversation, chosen from those offered in the Client Hello.

3.) The server sends its digital certificate to the client for inspection. Most modern browsers automatically check the certificate (depending on configuration) and warn the user if it's not valid. By not valid we mean that it does not point to a certification authority that is explicitly trusted, is out of date, etc.

4.) The server sends a Server Done message noting it has concluded the initial part of the setup sequence.

5.) The client generates a symmetric key and encrypts it using the server's public key (taken from the certificate). It then sends this message to the server.

6.) The client sends a Change Cipher Spec message telling the server all future communication should be with the new key.

7.) The client now sends a Finished message using the new key to determine if the server is able to decrypt the message and the negotiation was successful.

8.) The server sends a Change Cipher Spec message telling the client that all future communications will be encrypted.

9.) The server sends its own Finished message encrypted using the key. If the client can read this message then the negotiation is successfully completed.

SSL negotiation with mutual authentication (client and server) is a twelve-step process.

The additional steps, numbered by their position in the twelve-step sequence, are:

4.) The server sends a Certificate request after sending its own certificate.

6.) The client provides its Certificate.

8.) The client sends a Certificate Verify message in which it signs a known piece of data using its private key. The server uses the public key in the client certificate to check the signature, thereby ascertaining that the client holds the private key.
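The relationship between the nine-step and twelve-step negotiations can be checked with a small sketch. The message labels below follow the conventional handshake message names; the function name mutual_auth_sequence is an assumption for illustration, and nothing here performs a real handshake.

```python
# The nine messages of a negotiation with server authentication only, in order.
SERVER_AUTH_ONLY = [
    "ClientHello",
    "ServerHello",
    "Certificate (server)",
    "ServerHelloDone",
    "ClientKeyExchange",
    "ChangeCipherSpec (client)",
    "Finished (client)",
    "ChangeCipherSpec (server)",
    "Finished (server)",
]

# Extra messages for mutual authentication, keyed by their 1-based
# position in the resulting twelve-step sequence.
ADDITIONAL_STEPS = [
    (4, "CertificateRequest"),
    (6, "Certificate (client)"),
    (8, "CertificateVerify"),
]


def mutual_auth_sequence(base):
    """Insert the additional messages at their final positions, lowest first."""
    sequence = list(base)
    for position, message in ADDITIONAL_STEPS:
        sequence.insert(position - 1, message)
    return sequence


for number, message in enumerate(mutual_auth_sequence(SERVER_AUTH_ONLY), start=1):
    print(f"{number}.) {message}")
```

Inserting in ascending order works because each earlier insertion has already shifted the later messages into place.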

Access control mechanisms are a necessary and crucial design element of any application's security. In general, a web application should protect front-end and back-end data and system resources by implementing access control restrictions on what users can do, which resources they have access to, and what functions they are allowed to perform on the data. Ideally, an access control scheme should protect against the unauthorized viewing, modification, or copying of data. Additionally, access control mechanisms can help limit malicious code execution, or unauthorized actions by an attacker exploiting infrastructure dependencies (DNS server, ACE server, etc.).

Authorization and Access Control are terms often mistakenly interchanged. Authorization is the act of checking to see if a user has the proper permission to access a particular file or perform a particular action, assuming that user has successfully authenticated himself. Authorization is very much credential focused and dependent on specific rules and access control lists preset by the web application administrator(s) or data owners. Typical authorization checks involve querying for membership in a particular user group, possession of a particular clearance, or looking for that user on a resource's approved access control list, akin to a bouncer at an exclusive nightclub. Any access control mechanism is clearly dependent on effective and forge-resistant authentication controls used for authorization.

Access Control refers to the much more general way of controlling access to web resources, including restrictions based on things like the time of day, the IP address of the HTTP client browser, the domain of the HTTP client browser, the type of encryption the HTTP client can support, number of times the user has authenticated that day, the possession of any number of types of hardware/software tokens, or any other derived variables that can be extracted or calculated easily.
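The distinction can be sketched in code: an authorization check looks only at the user's credentials, while the broader access control decision also weighs the context of the request. The Request fields, the 10.0.0.0/8 network and the 08:00 to 18:00 window below are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import time
from ipaddress import ip_address, ip_network


@dataclass
class Request:
    user_groups: frozenset  # groups established at authentication time
    client_ip: str          # address of the HTTP client
    local_time: time        # time of day at which the request arrived


def is_authorized(req: Request, required_group: str) -> bool:
    """Authorization: a credential-focused check on group membership."""
    return required_group in req.user_groups


def access_allowed(req: Request, required_group: str,
                   allowed_network: str = "10.0.0.0/8",
                   open_from: time = time(8, 0),
                   open_until: time = time(18, 0)) -> bool:
    """Access control: authorization plus restrictions on network and time of day."""
    in_network = ip_address(req.client_ip) in ip_network(allowed_network)
    in_hours = open_from <= req.local_time <= open_until
    return is_authorized(req, required_group) and in_network and in_hours


req = Request(frozenset({"staff"}), "10.1.2.3", time(9, 30))
print(access_allowed(req, "staff"))
```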

Before choosing the access control mechanisms specific to your web application, several preparatory steps can help expedite and clarify the design process:

Try to quantify the relative value of information to be protected in terms of Confidentiality, Sensitivity, Classification, Privacy, and Integrity related to the organization as well as the individual users. Consider the worst case financial loss that unauthorized disclosure, modification, or denial of service of the information could cause. Designing elaborate and inconvenient access controls around unclassified or non-sensitive data can be counterproductive to the ultimate goal or purpose of the web application.

Determine the relative interaction that data owners and creators will have within the web application. Some applications may restrict any and all creation or ownership of data to anyone but the administrative or built-in system users. Are specific roles required to further codify the interactions between different types of users and administrators?

Specify the process for granting and revoking user access control rights on the system, whether it be a manual process, automatic upon registration or account creation, or through an administrative front-end tool.

Clearly delineate the types of role-driven functions the application will support. Try to determine which specific user functions should be built into the web application (logging in, viewing their information, modifying their information, sending a help request, etc.) as well as administrative functions (changing passwords, viewing any user's data, performing maintenance on the application, viewing transaction logs, etc.).

Try to align your access control mechanisms as closely as possible to your organization's security policy. Many things from the policy can map very well over to the implementation side of access control (acceptable time of day of certain data access, types of users allowed to see certain data or perform certain tasks, etc.). These types of mappings usually work the best with Role Based Access Control.

There is a plethora of accepted access control models in the information security realm. Many of these contain aspects that translate very well into the web application space, while others do not. A successful access control protection mechanism will likely combine aspects of each of the following models and should be applied not only to user management, but also to code and application integration of certain functions.

Discretionary Access Control (DAC) is a means of restricting access to information based on the identity of users and/or membership in certain groups. Access decisions are typically based on the authorizations granted to a user based on the credentials he presented at the time of authentication (user name, password, hardware/software token, etc.). In most typical DAC models, the owner of information or any resource is able to change its permissions at his discretion (thus the name). DAC has the drawback of the administrators not being able to centrally manage these permissions on files/information stored on the web server. A DAC access control model often exhibits one or more of the following attributes.

Data Owners can transfer ownership of information to other users

Data Owners can determine the type of access given to other users (read, write, copy, etc.)

Repetitive authorization failures to access the same resource or object generate an alarm and/or restrict the user's access

Special add-on or plug-in software must be applied to the HTTP client to prevent indiscriminate copying by users ("cutting and pasting" of information)

Users who do not have access to information should not be able to determine its characteristics (file size, file name, directory path, etc.)

Access to information is determined based on authorizations to access control lists based on user identifier and group membership.
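A few of the DAC attributes listed above (owner-controlled permissions, transfer of ownership, and decisions based on an access control list) can be sketched as follows. The Resource class and the user names are hypothetical; alarms on repeated failures and client-side copy prevention are not modelled.

```python
class Resource:
    """A resource whose owner decides, at his discretion, who may access it."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.acl = {owner: {"read", "write"}}  # user -> set of granted rights

    def grant(self, actor: str, user: str, rights: set) -> None:
        """Only the current owner may change the access control list."""
        if actor != self.owner:
            raise PermissionError(f"{actor} does not own this resource")
        self.acl.setdefault(user, set()).update(rights)

    def transfer_ownership(self, actor: str, new_owner: str) -> None:
        """The owner may hand the resource, and control of its ACL, to another user."""
        if actor != self.owner:
            raise PermissionError(f"{actor} does not own this resource")
        self.owner = new_owner
        self.acl.setdefault(new_owner, set()).update({"read", "write"})

    def can(self, user: str, right: str) -> bool:
        """Access decision based on the user's entry in the access control list."""
        return right in self.acl.get(user, set())


doc = Resource(owner="alice")
doc.grant("alice", "bob", {"read"})
print(doc.can("bob", "read"), doc.can("bob", "write"))
```

The sketch also shows the drawback noted above: no central administrator appears anywhere in the decision.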

Mandatory Access Control (MAC) ensures that the enforcement of organizational security policy does not rely on voluntary web application user compliance. MAC secures information by assigning sensitivity labels to information and comparing these to the level of sensitivity a user is operating at. In general, MAC access control mechanisms are more secure than DAC yet have trade-offs in performance and convenience to users. MAC mechanisms assign a security level to all information, assign a security clearance to each user, and ensure that all users only have access to that data for which they have a clearance. MAC is usually appropriate for extremely secure systems including multilevel secure military applications or mission critical data applications. A MAC access control model often exhibits one or more of the following attributes.

Only administrators, not data owners, make changes to a resource's security label.

All data is assigned a security level that reflects its relative sensitivity, confidentiality, and protection value.

All users can read from a lower classification than the one they are granted (A "secret" user can read an unclassified document).

All users can write to a higher classification (A "secret" user can post information to a Top Secret resource).

All users are given read/write access to objects only of the same classification (a "secret" user can only read/write to a secret document).

Access is authorized or restricted to objects based on the time of day depending on the labeling on the resource and the user's credentials (driven by policy).

Access is authorized or restricted to objects based on the security characteristics of the HTTP client (e.g. SSL bit length, version information, originating IP address or domain, etc.)
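The label comparisons in the list above (read from a lower classification, write to a higher one, read/write at the same one) can be sketched with an ordered set of levels. The three level names are illustrative assumptions; time-of-day and client-characteristic rules are not modelled.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most sensitive."""
    UNCLASSIFIED = 0
    SECRET = 1
    TOP_SECRET = 2


def can_read(clearance: Level, label: Level) -> bool:
    """A user may read data at or below his clearance."""
    return clearance >= label


def can_write(clearance: Level, label: Level) -> bool:
    """A user may write to data at or above his clearance."""
    return clearance <= label


user = Level.SECRET
for label in Level:
    print(label.name, "read:", can_read(user, label), "write:", can_write(user, label))
```

Both checks pass only when the label equals the clearance, which is the read/write case for objects of the same classification.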

In Role-Based Access Control (RBAC), access decisions are based on an individual's roles and responsibilities within the organization or user base. The process of defining roles is usually based on analyzing the fundamental goals and structure of an organization and is usually linked to the security policy. For instance, in a medical organization, the different roles of users may include doctor, nurse, attendant, patient, etc. Obviously, these members require different levels of access in order to perform their functions, but the types of web transactions and their allowed context also vary greatly depending on the security policy and any relevant regulations (HIPAA, Gramm-Leach-Bliley, etc.).

An RBAC access control framework should provide web application security administrators with the ability to determine who can perform what actions, when, from where, in what order, and in some cases under what relational circumstances. http://csrc.nist.gov/rbac/ provides some great resources for RBAC implementation. An RBAC access control model often exhibits one or more of the following attributes.

Roles are assigned based on organizational structure with emphasis on the organizational security policy

Roles are assigned by the administrator based on relative relationships within the organization or user base. For instance, a manager would have certain authorized transactions over his employees. An administrator would have certain authorized transactions over his specific realm of duties (backup, account creation, etc.)

Each role is designated a profile that includes all authorized commands, transactions, and allowable information access.

Roles are granted permissions based on the principle of least privilege.

Roles are determined with a separation of duties in mind so that a developer Role should not overlap a QA tester Role.

Roles are activated statically and dynamically as appropriate to certain relational triggers (help desk queue, security alert, initiation of a new project, etc.)

Roles can only be transferred or delegated using strict sign-offs and procedures.

Roles are managed centrally by a security administrator or project leader.
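A minimal sketch of the role-to-profile idea follows, using the medical roles mentioned earlier. The permission names, the user names and the two lookup tables are hypothetical; a real deployment would keep them in a centrally managed store rather than in code.

```python
# Each role carries a profile of authorized transactions (least privilege).
ROLE_PERMISSIONS = {
    "doctor": {"view_record", "update_record", "prescribe"},
    "nurse": {"view_record", "update_record"},
    "patient": {"view_own_record"},
}

# Roles are assigned to users centrally by an administrator.
USER_ROLES = {
    "dr_jones": {"doctor"},
    "n_smith": {"nurse"},
    "p_doe": {"patient"},
}


def permitted(user: str, permission: str) -> bool:
    """A user may perform an action only if one of his roles includes it."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(permitted("dr_jones", "prescribe"))
print(permitted("n_smith", "prescribe"))
```

Access is decided by role rather than by individual identity, so changing what nurses may do means editing one profile instead of every nurse's account.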

Logging is essential for providing key security information about a web application and its associated processes and integrated technologies. Generating detailed access and transaction logs is important for several reasons:

Logs are often the only record that suspicious behavior is taking place, and they can sometimes be fed in real time directly into intrusion detection systems.

Logs can provide individual accountability in the web application system universe by tracking a user's actions.

Logs are useful in reconstructing events after a problem has occurred, security related or not. Event reconstruction can allow a security administrator to determine the full extent of an intruder's activities and expedite the recovery process.